The Rise of Cloud Coding Agents

What it’s actually like to work with today’s leading agents such as Devin, Codex, and Cursor

Welcome to another post in the AI Coding Series, where I'll share the strategies and insights I've developed for effective AI-assisted coding.

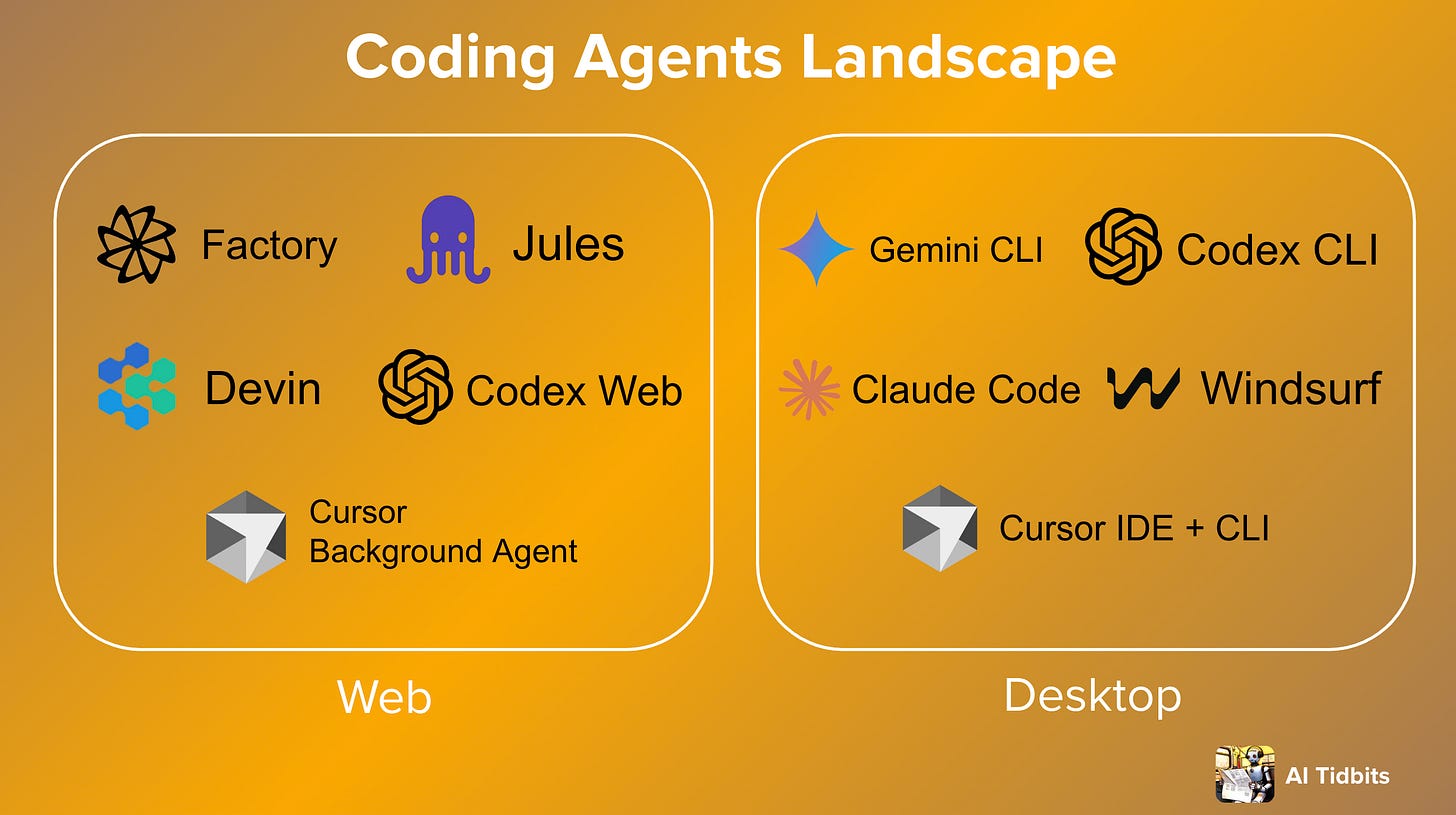

In this post, I break down the shift from desktop to cloud-based coding agents, exploring what makes them different, how they fit into real-world development workflows, and where each leading tool stands today. Whether you’re exploring Devin, Codex, Jules, Factory, or Cursor Background Agents, this guide will help you understand how they work, their strengths and trade-offs, and how to get the most out of them.

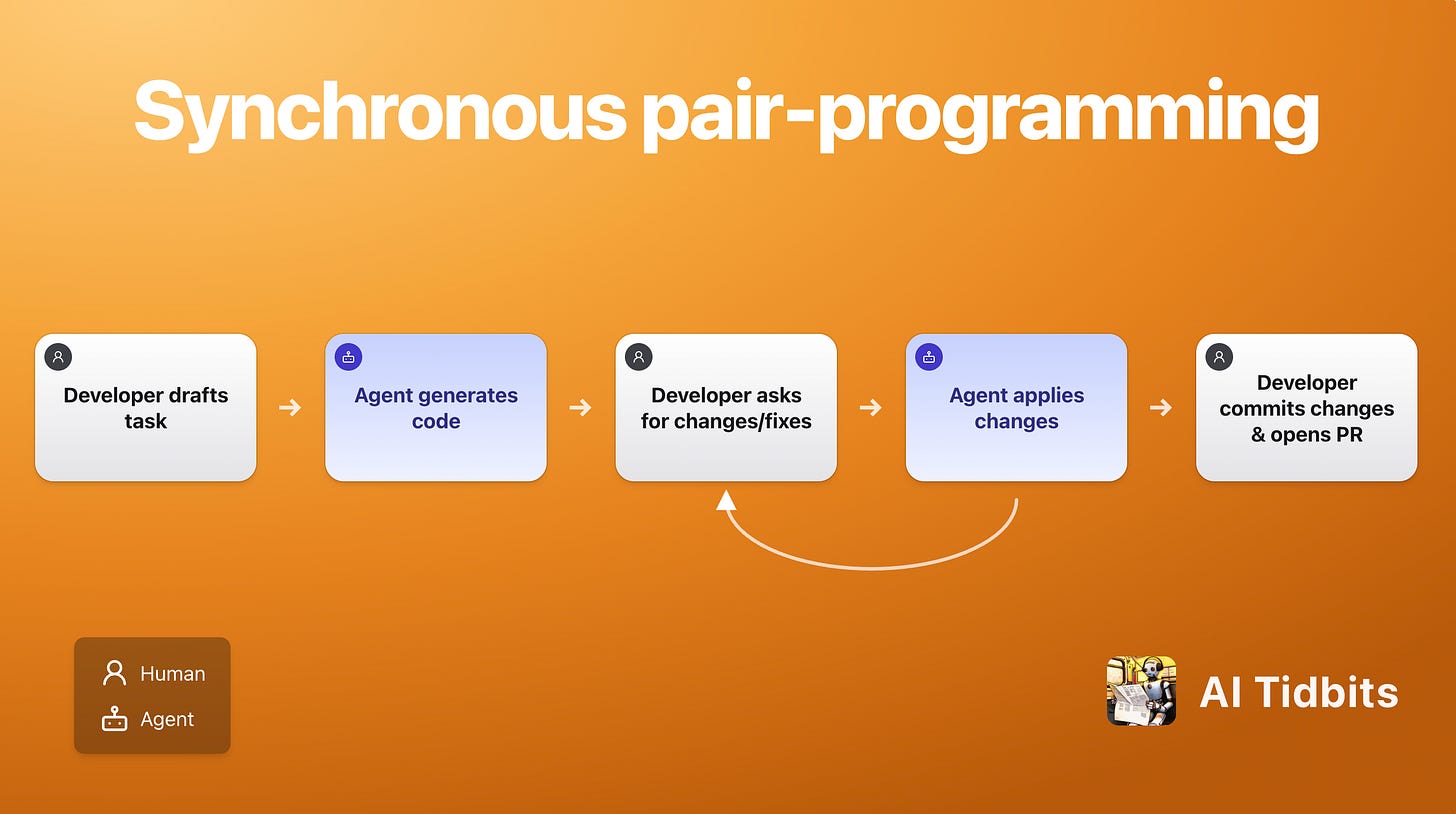

Agent-assisted coding is evolving quickly. Tools like Cursor, Windsurf, and Claude Code are already part of many developers’ workflows. These desktop agents run locally and rely on continuous back-and-forth: Developer drafts a coding task prompt → Coding agent generates code → Developer asks for changes/fixes → Coding agent implements change → Developer commits local changes as part of a pull request.

This pair-programming style boosts productivity, but it doesn’t scale. The interaction is synchronous: you must constantly steer the agent from the initial coding task prompt to creating a pull request. Running multiple coding agents in parallel feels like managing multiple junior developers simultaneously.

Real-world engineering teams work differently.

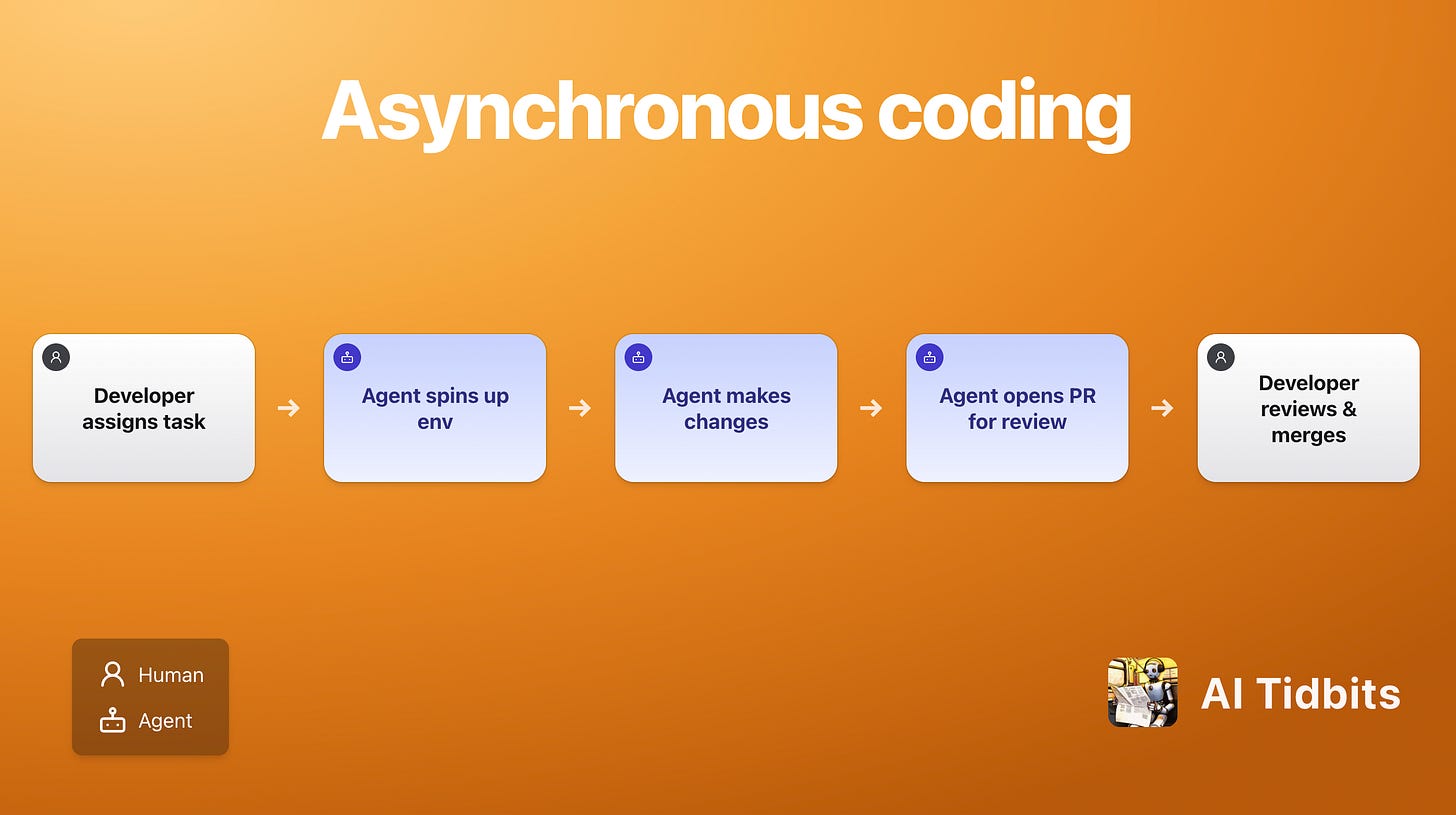

Enter cloud agents, asynchronous coding agents that better resemble a dev on your team: You assign a task → Cloud agent spins up its own environment in the cloud (as if it had its own laptop) → Cloud agent makes changes → Cloud agent opens a pull request for you to review.

You can request changes and merge once the code meets your standards. Some agents even integrate with Slack and other collaboration tools such as Linear and GitHub, further streamlining the development and CI/CD cycles.

In 2025, the line between desktop and cloud agents is blurring. Cognition, the creator of Devin, the web-managed cloud agent, acquired Windsurf, a Cursor-like IDE that acts as a desktop agent. Cursor, on the other hand, now offers background agents that run asynchronously both locally and on the web. Factory AI (cloud agent) offers a downloadable bridge that enables asynchronous workflows in local environments. Google’s Jules (cloud agent) just graduated out of Beta to complement Gemini desktop CLI (desktop agent), mirroring OpenAI’s Codex (web) and Codex CLI (desktop) approach.

The path for coding alongside AI is set: as models and tooling improve and best practices solidify, coding agents are shifting to asynchronous-first workflows. To clarify, autonomy isn’t a “web” feature; it’s an agent capability. It just so happens that, today, most fully autonomous agents are delivered as web-based tools.

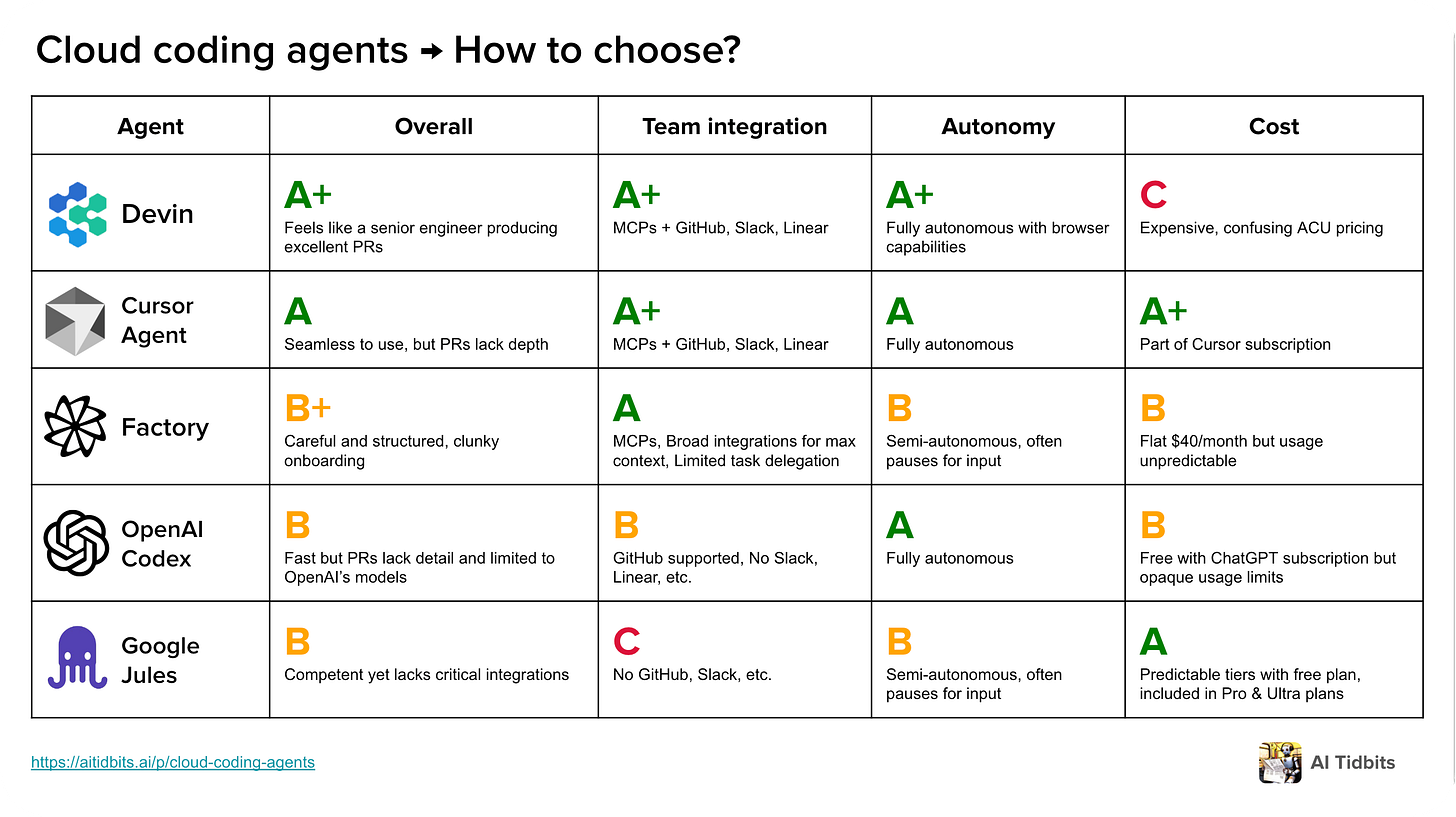

In this post, I’ll walk you through what it’s like to work with each of the leading cloud agents, including a screen recording of my workflow so you can see how the interfaces look and behave in practice. Whether you’re curious about what these agents can actually do or trying to figure out which one fits best into your development workflow, this guide is for you. I’ve also included a comparison table at the end that makes clear which tools truly stand out.

Evaluation framework

Each agent was evaluated across four criteria:

Overall experience - onboarding flow, coding UX, working process smoothness (planning → execution → testing), and pull request clarity.

Team integration - how well the agent fits into real workflows: taking tasks, opening solid pull requests, addressing feedback, and communicating through platforms like Slack.

Autonomy - the level of independence from assignment to pull request: does the agent require step-by-step guidance and close supervision, or can it deliver end-to-end?

Cost - pricing model and the actual cost of completing the benchmark task.

To evaluate the agents, I gave each one the same benchmark assignment: add recurring task support to a lightweight to-do app repository:

Add support for recurring tasks. Users should be able to pick from daily, weekly, or monthly recurrence options when creating or editing a task. When a recurring task is marked complete, create the next occurrence immediately with the due date shifted by the chosen interval. Keep changes simple.

I deliberately chose a straightforward task that every agent completed successfully. The goal of this post is not to benchmark their performance, but to evaluate the experience of working with them. In future posts, I plan to conduct more complex evaluations to compare these agents on challenging, real-world tasks.
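To make the benchmark assignment concrete, here is a minimal sketch of the core logic the prompt asks for. It is not the code any of the agents produced, and the `Task`, `shift_due_date`, and `complete` names are hypothetical. I'm also assuming that a monthly shift clamps to the last day of shorter months, which the prompt leaves unspecified.

```python
import calendar
from dataclasses import dataclass, replace
from datetime import date, timedelta
from typing import Optional, Tuple


@dataclass(frozen=True)
class Task:
    """Hypothetical to-do item; the real app's model may look different."""
    title: str
    due: date
    recurrence: Optional[str] = None  # "daily", "weekly", "monthly", or None
    done: bool = False


def shift_due_date(due: date, recurrence: str) -> date:
    """Return the due date moved forward by one recurrence interval."""
    if recurrence == "daily":
        return due + timedelta(days=1)
    if recurrence == "weekly":
        return due + timedelta(weeks=1)
    if recurrence == "monthly":
        # Roll over to the next month, wrapping December into January.
        year = due.year + due.month // 12
        month = due.month % 12 + 1
        # Assumption: clamp to the month's last day (Jan 31 -> Feb 28/29).
        last_day = calendar.monthrange(year, month)[1]
        return date(year, month, min(due.day, last_day))
    raise ValueError(f"unknown recurrence: {recurrence!r}")


def complete(task: Task) -> Tuple[Task, Optional[Task]]:
    """Mark a task done and, if it recurs, create the next occurrence."""
    finished = replace(task, done=True)
    if task.recurrence is None:
        return finished, None
    next_due = shift_due_date(task.due, task.recurrence)
    return finished, replace(task, due=next_due, done=False)
```

Even a task this small hides a decision (what "one month after January 31" means), which is the kind of ambiguity the planning and clarifying-question steps discussed below are meant to surface.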

The results

Devin

Overall experience

Setup took minutes: sign in, connect GitHub, and Devin is ready to go. It scanned the codebase, created a confident plan, executed the task, and opened a well-structured pull request, all autonomously.

The experience felt like pair programming with a senior engineer: you see the shell (the command line for running code), VS Code (where code is edited), and a browser (for testing), all updating in real-time.

The pull request included a clear summary, test plan, and even a diagram, making review easy.

Devin handled feedback directly through GitHub, just like a real teammate. It felt like collaborating with someone who not only ships quality code, but also knows how to get it merged.

Team integration

Devin slots naturally into team workflows. On GitHub, you can review its code or ask it questions exactly as you would with a colleague. It also integrates with Slack, Linear, and Jira, allowing you to tag it in a thread or assign it to an issue.

Devin can also connect to MCP servers, enabling seamless connections to external tools and internal systems. Through its MCP server, Devin can pull in structured context from documentation, analytics, and monitoring platforms like Notion, Sentry, and Datadog. This makes it easier for Devin to act with deeper awareness of your infrastructure and business logic.

Autonomy

Devin is fully autonomous: once you assign a task, it produces a pull request without further input. For web apps, it can even run and test the app itself. This autonomy is powerful because it allows you to run multiple coding agents that don’t require supervision. The downside is that it can go off-track if your prompt and intentions are vague, wasting time and tokens. Fortunately, Devin has substantially improved since the last time I tested it in December, making it autonomous and useful.

Cost

Devin’s pricing is structured in Agent Compute Units (ACUs). Those units represent the work done by Devin in a single session. Steps like planning, gathering context, running code, or using the browser all consume ACUs.

Each ACU costs $2.25. My benchmark task used 3 ACUs, which comes to about $6.75. That’s steep for a simple job. This novel ACU model also introduces friction. Since no other coding agent uses it, there's no mental benchmark, making it harder for developers to estimate costs. The lack of transparency creates hesitation that hinders adoption, especially when simpler pricing models are the norm.
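One way to build that missing mental benchmark is to do the arithmetic yourself. The snippet below is only an illustration: `estimate_devin_cost` is a hypothetical helper, not part of any Devin API, and the default price is the per-ACU rate quoted above.

```python
PRICE_PER_ACU_USD = 2.25  # per-ACU price quoted in this post; may change


def estimate_devin_cost(acus: float, price_per_acu: float = PRICE_PER_ACU_USD) -> float:
    """Return the estimated session cost in USD for a given ACU count."""
    if acus < 0:
        raise ValueError("ACU count cannot be negative")
    return round(acus * price_per_acu, 2)


# The benchmark task consumed 3 ACUs.
print(estimate_devin_cost(3))  # 6.75
```

The hard part is not the multiplication but guessing the ACU count before a session starts, which is where the pricing friction comes from.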

How to get the most out of Devin

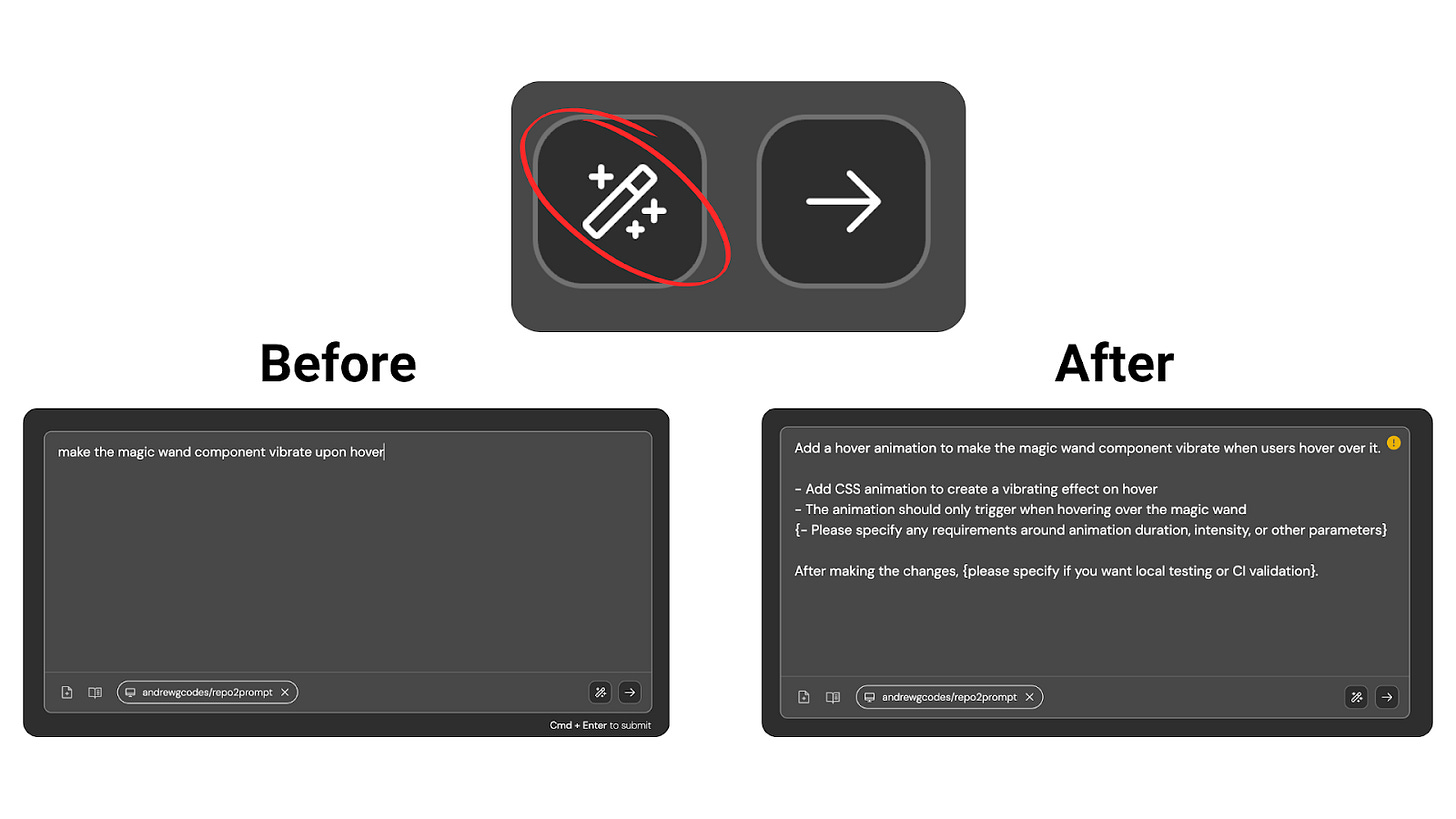

(1) The Prompt Improvement Button

Devin has a built-in prompt improver that refines your instructions before it starts. Running prompts through it clarifies intent and removes ambiguity, which helps Devin produce more accurate, review-ready pull requests.

(2) Leverage Devin’s Knowledge capability

Knowledge lets you onboard Devin with your project’s context, just like you’d ramp up a new engineer. It serves the same purpose as coding agents’ context files such as .cursorrules or AGENTS.md, but with structured triggers built in.

Add information in small pieces, group it in folders, and link it to repositories with triggers so Devin knows when to apply it. Store anything you would want an engineer on your team to know: coding standards, workflows, deployment steps, bug fixes, etc. Once added, Devin recalls and applies it automatically. More tips covering Knowledge here.

(3) Devin Playbooks, à la Claude Subagents

Playbooks are reusable prompts for recurring tasks. Instead of re-explaining a process to Devin every time, create a playbook and ask Devin to use it. It’s like showing a teammate how to do something once and having them write it down so they never ask again.

(4) Connect Devin to Slack/Linear/Jira

Plug Devin into your team’s task management workflow: Assign it issues or tag it in threads. It will pick up the task immediately and get to work. That is especially useful when on the road, as you can tag Devin in a Slack conversation and ask it to take a first (and last?) pass at fixing a bug or implementing a feature.

OpenAI Codex

Overall experience

Codex is built into ChatGPT, so setup is quick: load your repo, assign a task, and it gets to work. It scans the code, plans, runs tests, and opens a pull request. Also, as of last week, Codex can run in your IDE of choice as an extension, supporting Windsurf, VS Code, and Cursor. You can use it locally or delegate tasks to the cloud.

The interface is minimal, with a shell view and collapsible logs. When complete, Codex provides a concise summary with direct code references to expedite the review process.

Team integration

Codex doesn’t play well with existing tools such as Slack, Linear, and Jira, which is a major downside given that many teams use Slack to discuss and assign work, and GitHub is the familiar interface for code reviews.

Autonomy

Codex is fully autonomous: once assigned a task, it works in its isolated cloud environment and delivers a complete pull request within minutes without requiring further prompting. Similar to Devin, that could be a neat feat if the agent is capable enough, but dangerous if vague coding tasks lead to poor generated code. For our simple task, Codex performed well enough.

Cost

Codex is included with ChatGPT Plus or Pro, so there is no extra charge beyond your subscription. That said, usage limits are not publicly disclosed, which can be a red flag for many teams that require a reliable coding agent with transparent rate limits.

How to get the most out of Codex

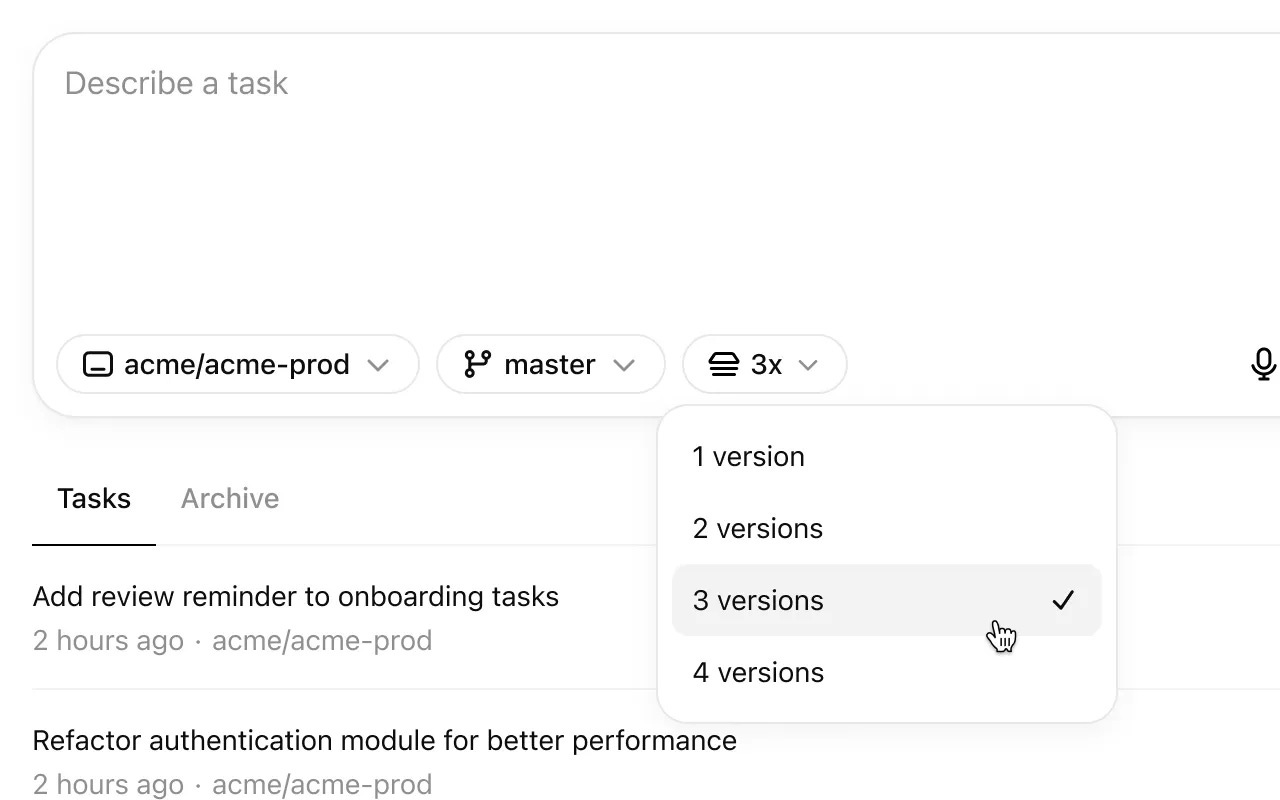

(1) Generate multiple responses simultaneously, choose the best one

Codex’s Best of N feature allows you to generate several independent solutions in parallel for the same task. This lets you quickly explore different approaches and select the one that best fits your needs, without adding time to your workflow.

(2) Enable internet access when needed

By default, Codex runs offline after setup, so it cannot look up documentation or install new packages. Enabling internet access solves this issue, but it should only be used when the task requires it, as it carries security risks. For instance, an attacker could slip in a malicious command that Codex executes, unintentionally leaking sensitive data.

(3) Tag Codex in pull requests

Tag Codex for questions or reviews via @codex <question> or @codex review. You can also tag it for changes, but that spawns a new task with a separate pull request you’ll need to merge back into the original.

Google Jules

Overall experience

Setup was quick: I signed in with Google, linked GitHub, and Jules was ready. I provided the task and it then scanned the codebase, generated a detailed plan, and executed it step by step until a pull request was opened. The interface, structured around the plan, made progress easy to follow with expandable diffs, similar to Claude Code’s and Cursor’s recently released planning feature.

However, the pull request’s description was lacking. For example, it stated “This change adds support for recurring tasks…”, which reads more like a product note than a proper description of a technical feature. I expected at least one section covering the technical components added or changed to make the review faster and easier. Code review also felt unnatural. Similar to Codex, I couldn’t review the pull request as I would on GitHub: all reviews had to happen in Jules, where I could only leave a single block of feedback instead of commenting on individual lines. While the grouping of changes by the plan’s action items in the interface made diffs easy to follow, the process was rigid compared to reviewing a colleague’s PR.

Team integration

Jules does not fit smoothly into team workflows. On GitHub, you cannot review its code or collaborate with it the way you would with another developer. It also lacks integrations with tools like Slack, Linear, or Jira, leaving its interface as the primary means of interaction. As a result, Jules feels separate from the normal channels teams rely on to collaborate.

Autonomy

Jules is semi-autonomous. It generates an implementation plan that you approve in the beginning. If you don’t respond, the plan auto-approves and executes, completing the task end-to-end without requiring your input, unless it encounters roadblocks or needs clarifications, similar to how Claude Code prompts the user for input.

Cost

Jules is part of Google’s AI package. It offers the following tiers:

Free tier - 15 tasks per day, 3 concurrent tasks

Pro - 100 tasks per day, 15 concurrent tasks

Ultra - 300 tasks per day, 60 concurrent tasks

The free tier makes it easy to get started. The pricing model is transparent and predictable, as it remains consistent regardless of task complexity and does not depend on unpredictable factors. For developers, this makes Jules straightforward to adopt and scale.
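Because the limits are counted per task, checking whether a tier covers your workload is a simple lookup. The sketch below encodes the three tiers listed above; `Tier` and `smallest_fitting_tier` are hypothetical names of my own, not anything Jules exposes.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    tasks_per_day: int
    concurrent_tasks: int


# Limits as listed in this post, ordered from smallest to largest tier.
JULES_TIERS = [
    Tier("Free", 15, 3),
    Tier("Pro", 100, 15),
    Tier("Ultra", 300, 60),
]


def smallest_fitting_tier(tasks_per_day: int, concurrent_tasks: int) -> Optional[Tier]:
    """Return the first tier whose limits cover both numbers, or None."""
    for tier in JULES_TIERS:
        if tier.tasks_per_day >= tasks_per_day and tier.concurrent_tasks >= concurrent_tasks:
            return tier
    return None


# Example: 40 tasks a day with up to 5 running at once.
print(smallest_fitting_tier(40, 5).name)  # Pro
```

Note that either limit can push you up a tier: 40 tasks a day with 20 running concurrently would already require Ultra.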

How to get the most out of Jules

(1) Interactive Plan mode

Start tasks using the Interactive Plan mode. Jules will then scan the codebase, ask clarifying questions, and create an implementation plan for you to approve before execution. This prevents ambiguity and ensures the output matches your intent, and it is already the way I code nowadays. It feels like a junior developer confirming requirements before writing code.

(2) Configure environment setup

If a repo requires setup commands such as dependencies or environment variables, define them upfront. Jules will run them automatically when working with that repo, keeping environments consistent.

Factory AI

Overall experience

Factory is built around the concept of “Droids”: autonomous agents purpose-built for different roles across the software development lifecycle (Code, Reliability, Knowledge, Product). Each Droid comes with its own architecture, memory, and tools, and can operate independently or in parallel. Unlike traditional coding assistants, Droids are designed to own and execute tasks end-to-end, making Factory feel more like a full-stack engineering team than a co-pilot, similar to the idea behind Claude Code's Subagents.

When using Factory, it’s clear that the company is focused on enterprise users, rather than indie developers, which could hinder the organic bottom-up growth that Claude Code and Cursor benefit from. For instance, you must enter credit card details for a trial with no way to pre-cancel. Pricing is also somewhat opaque and tied to token usage: $40 for 20 million tokens.

Factory’s most significant drawback, however, is the indexing process. For our small benchmark repository, indexing took anywhere from several minutes to multiple hours. That’s a considerable delay just to get started. In a developer world optimized for instant feedback loops and low-friction experimentation, this delay feels off.

On the other hand, Factory shines in its coding cycle: it builds a clear to-do list, asks intelligent and clarifying questions, and executes with visible reasoning and file-by-file diffs. The pull request is detailed and reads like something a senior engineer would write. It also provides the broadest set of integrations, allowing you to consume relevant context from popular tools such as Notion, Google Drive, and even incident management tools like Sentry and PagerDuty.

Additionally, to Factory’s credit, user experience appears to have become a recent priority. When I first used it back in March, the interface was cluttered and overwhelming: too many panes, not enough clarity. During my coding session for this article, however, the redesign was substantially better: the layout is cleaner, more focused, and thoughtfully organized around the key decisions and information needed at each stage.

Team integration

Integrations are where Factory differentiates itself, again showcasing its enterprise focus. It can consume context from your team’s internal knowledge systems: Slack, Linear, Jira, Notion, Google Drive, Sentry, and PagerDuty. Such integrations infuse the coding agent with context beyond the project’s repository, which improves the agent’s performance.

These integrations allow Factory to enrich its understanding of your engineering workflows far beyond what's available in the repository. However, these integrations are read-only: Factory can ingest context from these platforms but cannot be actively directed through them. You can’t, for example, tag Factory in a Slack thread or assign it a Linear ticket and expect it to take action, unlike agents like Devin.

Cost

Factory offers a 14-day trial, after which pricing starts at $40 per month for 20 million tokens. Our task consumed ~330k tokens. As a full-time engineer, you’ll likely burn through this quota quickly: our simple feature alone ate through over 1.5% of the monthly allowance.
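For a rough sense of scale, the numbers above can be turned into a per-task cost and a monthly task budget. This is a back-of-the-envelope sketch: the function names are hypothetical, and it assumes every task consumes about as many tokens as the benchmark did, which real work will not.

```python
PLAN_PRICE_USD = 40.0     # monthly price quoted above
PLAN_TOKENS = 20_000_000  # monthly token allowance quoted above
TASK_TOKENS = 330_000     # approximate tokens used by the benchmark task


def quota_share(task_tokens: int, plan_tokens: int = PLAN_TOKENS) -> float:
    """Fraction of the monthly allowance that one task consumes."""
    return task_tokens / plan_tokens


def cost_per_task(task_tokens: int, plan_price: float = PLAN_PRICE_USD,
                  plan_tokens: int = PLAN_TOKENS) -> float:
    """Effective dollar cost of one task, prorated from the plan price."""
    return plan_price * quota_share(task_tokens, plan_tokens)


def tasks_per_month(task_tokens: int, plan_tokens: int = PLAN_TOKENS) -> int:
    """How many same-sized tasks fit in the monthly allowance."""
    return plan_tokens // task_tokens


print(f"{quota_share(TASK_TOKENS):.2%}")     # 1.65%
print(f"${cost_per_task(TASK_TOKENS):.2f}")  # $0.66
print(tasks_per_month(TASK_TOKENS))          # 60
```

Under that assumption, the base plan covers about 60 benchmark-sized tasks a month, or roughly three per working day.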

Autonomy

Factory is semi-autonomous and often requires your input. It avoids making assumptions, often asks clarifying questions before starting, and seeks approval before taking actions such as creating a pull request. By default, it will not create branches or commit files without your approval, though you can disable this safeguard.

How to get the most out of Factory

(1) Use Factory Bridge

Bridge is a secure connector that links Factory's cloud platform to your local machine. It enables running CLI commands, managing local processes, and accessing local files directly from Factory sessions. Tip: use it within an isolated environment (e.g., Docker) for safe and optimal results. I used Bridge when I couldn’t access my laptop or wanted to split tasks between Factory and Claude Code, saving Factory tokens while running both agents in parallel.

(2) Use a remote machine

When local access is not needed, connect Factory to a remote machine. It still grants full command and workflow access without requiring you to touch your computer.

Cursor Background Agent

Overall experience

Getting started is easy for existing Cursor users: simply submit a task and select “Send to Background”. On the web, log in and paste your coding prompt.

You can watch the agent’s progress by connecting to its virtual cloud environment (“Open VM”), which mirrors a live coding session. Once complete, you get a change summary and file diffs in both desktop and web.

The pull request description is barebones, often limited to a single sentence. It lacks the detailed context that would streamline reviews. Code review, however, feels seamless: you can comment directly on GitHub, tag the agent, and get a response in-line, just like working with a human teammate.

Team integration

Cursor integrates directly into Slack and Linear, so you can tag it in threads or assign it to issues.

Autonomy

Cursor’s Background version is fully autonomous. Once you assign a task, it analyzes the codebase, executes the plan, and opens a pull request without requiring user input.

Cost

Background agents are billed at the API rate of the model you choose, which is affordable with models like gpt-5-high. The task I ran came to 8¢. Token-based pricing is inherently unpredictable, but that’s already the norm with the popular Claude Code and Cursor desktop agents.

How to get the most out of Cursor Background Agent

(1) Add a .cursorrules file to your repositories

Cursor’s best practices also apply to background agents. Make sure every repository you use includes a .cursorrules file, just as you would when working locally (you can use my recently released tool to generate one).

(2) Connect Cursor to Slack or Linear

If your team uses either tool, integrate Cursor so you can assign it issues or tag it in live discussions. Whether it is a bug in Slack or a ticket in Linear, the agent can pick it up immediately and start working.

More tips here https://www.aitidbits.ai/p/sahar-ai-coding

Choosing the right coding agent

We’re just getting started with coding agents

All of these tools are still in their early stages. We can expect them to evolve quickly: I had to update this post twice in the span of two weeks due to new releases.

Just as importantly, the paradigm of working with autonomous agents is still taking shape. Tools like Claude Code’s Subagents and newcomers like Conductor and Task Master hint at what’s to come. In future posts, I’ll dive deeper into each of the tools reviewed here. Subscribe to follow along as I learn how to collaborate with this new generation of coding agents.
