LinkedIn Highlights, May 2025 - AI Coding Edition

Six practical tips for coding with AI: from agent workflows to Claude hacks, plus a bonus tip to cut Claude API costs and latency

Welcome to LinkedIn Highlights!

Each month, I share my top-performing LinkedIn posts, bringing you the best of AI straight from the frontlines of academia and industry. This edition includes seven posts instead of the usual five; there were just too many good ones to leave out!

As a frequent LinkedIn contributor, I regularly share insights on groundbreaking papers, promising open-source packages, and significant AI product launches. These posts offer more depth and detail than our weekly snippets, providing a comprehensive look at the latest AI developments.

Over the past month, I’ve been deep in the weeds of coding with AI: tinkering, prototyping, and writing about how coding with LLMs can go beyond vibe coding, making us engineers substantially more productive. This post covers six of my most popular LinkedIn posts on the topic, each packed with practical takeaways for both aspiring and experienced engineers. Plus, a bonus tip at the end: an underrated trick from Anthropic to reduce cost by 90% and latency by 50%.

If you're not on LinkedIn, or simply missed a post, this monthly roundup keeps you informed about the most impactful AI news and innovations.

Post(s) published this month:

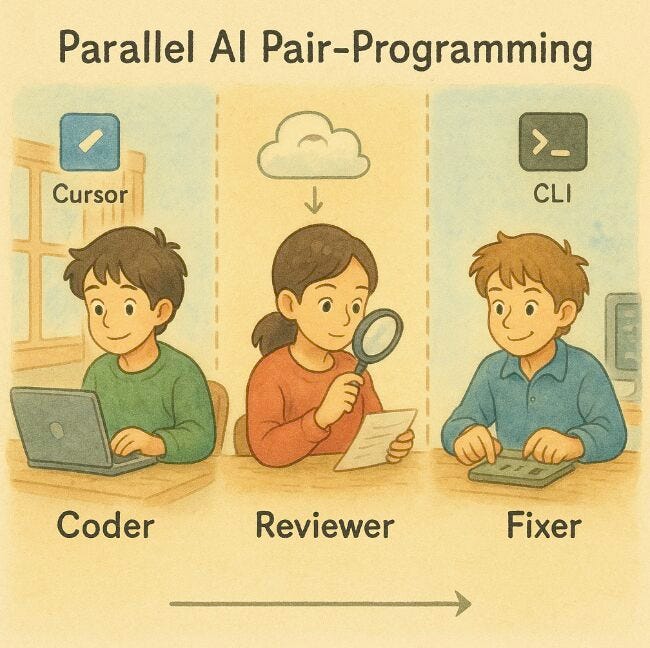

(1) Parallel AI pair-programming

Recently, I adopted a coding tip from the Anthropic team that has significantly boosted the quality of my AI-generated code.

Anthropic runs multiple Claude instances in parallel to dramatically improve code quality compared to single-instance workflows.

How it works:

One Claude writes the code (the coder), focusing purely on implementation

A second Claude reviews it (the reviewer), examining the code with fresh context, free from implementation bias

A third Claude applies fixes (the fixer), integrating feedback without defensiveness

This technique works with any AI assistant, not just Claude. Spin each agent up in its own tab—Cursor, Windsurf, or plain CLI. Then, let Git commits serve as the hand-off protocol.
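The three roles can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic's implementation: `Model`, `Handoff`, and `run_pipeline` are hypothetical names, each model is assumed to be a plain function from prompt to text, and the `Handoff` object stands in for the Git commit that would carry work between tabs.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical model interface: takes a prompt, returns text.
# Swap in whichever assistant or SDK you actually use.
Model = Callable[[str], str]


@dataclass
class Handoff:
    """What one agent passes on (a stand-in for a Git commit)."""
    code: str
    review: str = ""


def run_pipeline(task: str, coder: Model, reviewer: Model, fixer: Model) -> Handoff:
    # The coder sees only the task description.
    code = coder(f"Implement the following task:\n{task}")
    # The reviewer sees only the code, never the coder's conversation.
    review = reviewer(f"Review this code for bugs and edge cases:\n{code}")
    # The fixer sees the code plus the review, and nothing else.
    fixed = fixer(
        f"Apply this review feedback.\n\nCode:\n{code}\n\nReview:\n{review}"
    )
    return Handoff(code=fixed, review=review)
```

The point of the sketch is that each call builds its prompt from scratch, so no agent inherits another agent's context.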

This separation mimics human pair programming but supercharges it with AI speed. When a single AI handles everything, blind spots emerge naturally. Multiple instances create a system of checks and balances that catch what monolithic workflows miss.

This shows that context separation matters. By giving each AI a distinct role with clean context boundaries, you essentially create specialized AI engineers, each bringing a unique perspective to the problem.

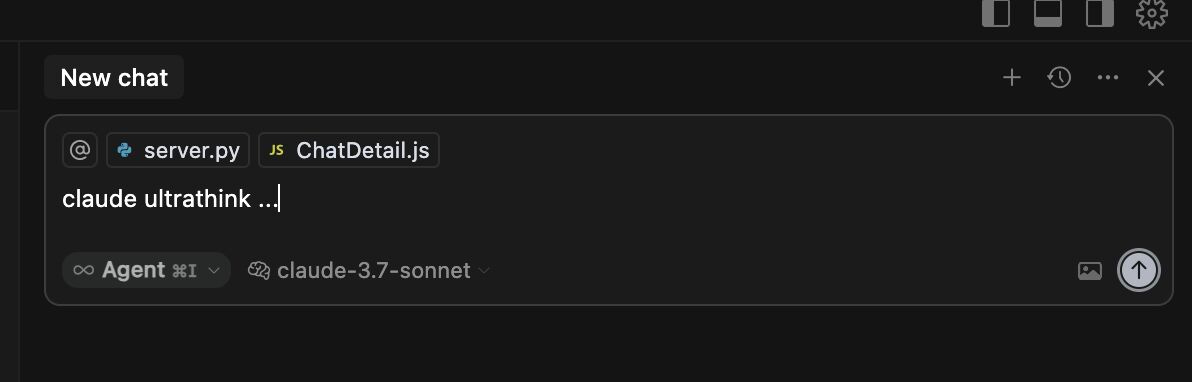

(2) Claude ultra think

One extra word can unlock Claude’s hidden reasoning budget and give developers building with AI planning superpowers.

According to Anthropic, Claude allocates compute tiers based on trigger words:

think < think hard < think harder < ultrathink.

Drop any of these at the start of a prompt and the model gives itself more “brain cycles” before answering. Perfect for discussions on architecture, API design, or edge-case analysis. I also use it when debugging an issue that other models (Gemini, o1) didn’t manage to solve.

It works in Cursor/Windsurf and the Claude web chat; no flags, no config.

Give it a try: open your next coding session, preface the prompt with 'think harder' or 'ultrathink' and see how Claude’s performance improves.
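If you send prompts from a script, a tiny helper keeps the trigger phrase consistent. This is only a sketch: `THINK_LEVELS` and `with_thinking` are hypothetical names, and putting the phrase on its own line before the prompt is my assumption about formatting, not something Anthropic specifies.

```python
# Trigger phrases in increasing order of reasoning budget, as listed above.
THINK_LEVELS = ("think", "think hard", "think harder", "ultrathink")


def with_thinking(prompt: str, level: int = 0) -> str:
    """Prefix a prompt with one of the trigger phrases (0 = lowest tier)."""
    if not 0 <= level < len(THINK_LEVELS):
        raise ValueError(f"level must be between 0 and {len(THINK_LEVELS) - 1}")
    # Assumption: the phrase goes on its own line ahead of the prompt.
    return f"{THINK_LEVELS[level]}\n\n{prompt}"
```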

My recent post on coding with AI:

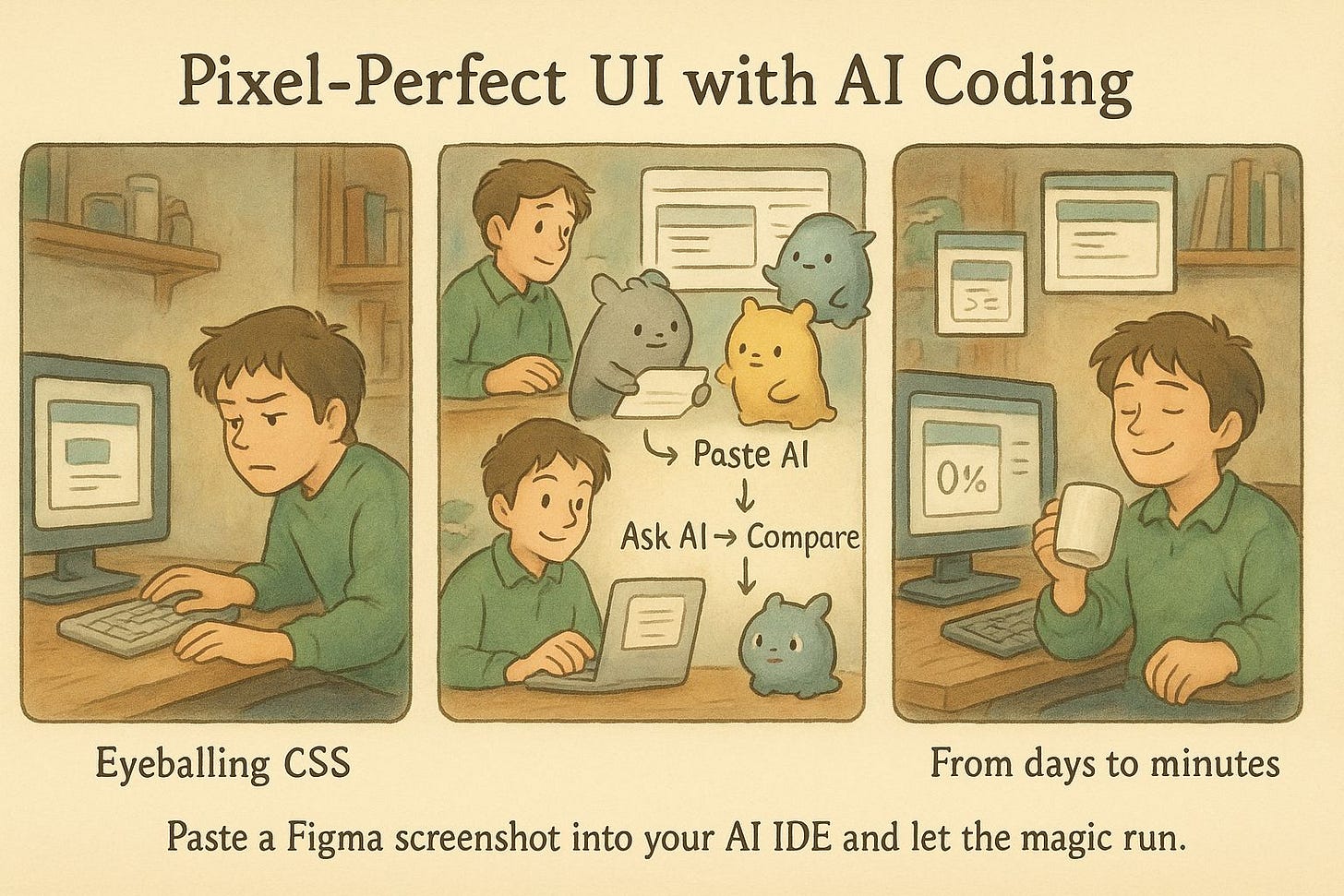

(3) Coding visual interfaces with coding agents

Most devs still eyeball CSS tweaks, even though coding models like Claude and o3 can get you to a pixel-perfect UI.

Screenshot-driven iteration works across o3, Gemini, and Claude (I’ve had the best luck with Claude). Process:

(1) Paste the design mock via clipboard, drag-drop, or file path

(2) Ask the model: “Implement this layout, screenshot the result, and compare it to the mock. Repeat until they match.”

(3) Watch it code → screenshot → diff → refine until the visual diff hits zero
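The loop in step (3) can be sketched as follows. This is a toy model under stated assumptions: screenshots are nested lists of pixel values rather than real images, and `render` and `revise` are hypothetical callables standing in for "take a screenshot of the current code" and "ask the model to refine the code given the diff".

```python
from typing import Callable, List, Tuple

Image = List[List[int]]  # toy stand-in for a screenshot: rows of pixel values


def pixel_diff(a: Image, b: Image) -> int:
    """Count the pixels that differ between two same-sized images."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same dimensions")
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def iterate_until_match(
    mock: Image,
    code: str,
    render: Callable[[str], Image],      # hypothetical: code -> screenshot
    revise: Callable[[str, int], str],   # hypothetical: (code, diff) -> new code
    max_rounds: int = 10,
) -> Tuple[str, int]:
    """Run code -> screenshot -> diff -> refine until the diff hits zero."""
    for round_no in range(1, max_rounds + 1):
        diff = pixel_diff(render(code), mock)
        if diff == 0:
            return code, round_no
        code = revise(code, diff)
    raise RuntimeError(f"no match after {max_rounds} rounds")
```

The `max_rounds` cap is a design choice of the sketch: without it, a layout the model cannot reproduce would loop forever.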

Design-to-dev handoff shrinks from days to minutes. Frontend teams can A/B three layout variants before lunch, and PMs can solo build UI prototypes without opening Figma.

Try it: paste a screenshot/Figma design with the above prompt in your AI IDE of choice and let the loop run.

(4) Auto-generated MCP servers

One trick I often use that not many other AI builders know: you can now spin up an MCP server for almost any Mintlify-powered API with just two commands.

MCP (Model Context Protocol) servers are the missing layer that enables AI coding assistants like Cursor and Windsurf to generate reliable programs that interact with APIs through natural language, without needing manual integrations or risking hallucinated parameters and broken function calls.

Traditionally, setting up an MCP server meant custom-building one for each API. A tedious, manual process. Now, thanks to Mintlify’s new package, it’s effortless:

npm i mcp

npx mcp add [api subdomain] (for example, docs.bland.ai)

That’s it. The API you’re trying to work with becomes an MCP server. No custom code, no extra work.

You can test it with the Mintlify-powered Bland API docs to initiate phone calls programmatically.
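To see why an MCP server guards against hallucinated parameters, it helps to look at what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0, and each tool advertises a JSON Schema for its arguments. The sketch below builds a `tools/call` request and rejects arguments the schema does not know. The `send_call` tool and its fields are hypothetical, not Bland's actual API, and the validation is deliberately simplified to name checks only.

```python
import json
from typing import Any, Dict

# Hypothetical tool definition in the shape MCP servers advertise:
# a name, a description, and a JSON Schema for the arguments.
TOOL: Dict[str, Any] = {
    "name": "send_call",
    "description": "Start an outbound phone call.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "phone_number": {"type": "string"},
            "task": {"type": "string"},
        },
        "required": ["phone_number"],
    },
}


def build_tool_call(tool: Dict[str, Any], arguments: Dict[str, Any], request_id: int = 1) -> str:
    """Build a JSON-RPC `tools/call` request, refusing unknown or missing arguments."""
    schema = tool["inputSchema"]
    unknown = set(arguments) - set(schema["properties"])
    missing = set(schema.get("required", [])) - set(arguments)
    if unknown or missing:
        raise ValueError(f"unknown={sorted(unknown)} missing={sorted(missing)}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    })
```

A model that invents a parameter name fails this check immediately, instead of producing a broken API call at runtime.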

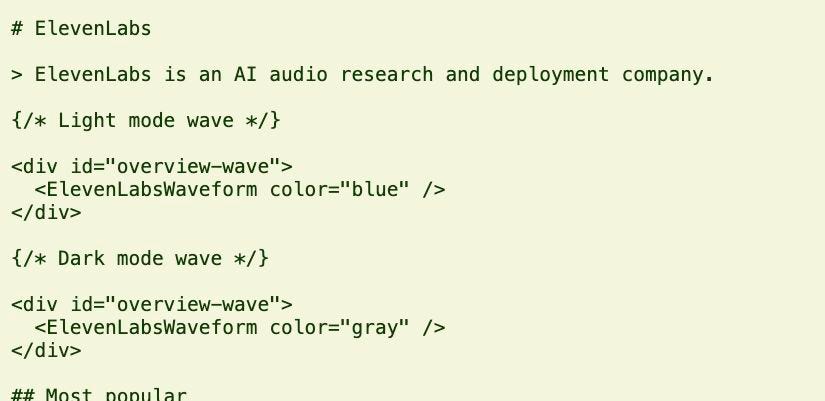

(5) llms.txt

Most developers still use LLMs to write isolated functions. However, the real power of AI coding lies in making coding models interact reliably with APIs.

That’s where llms.txt comes in.

Inspired by robots.txt, llms.txt is a simple, standardized Markdown file that describes how a website or API works. It resides at the root of a site and distills documentation, endpoints, authentication flows, and usage examples into clear, predictable text. LLMs skip the cumbersome scraping, read the file, and instantly know how to call your API.

Why this matters:

Today, prompting an LLM to use an API is messy. Developers must manually write tool descriptions, guess parameter formats, and hope the model figures it out.

With llms.txt, there’s no HTML parsing and no getting rate-limited while crawling websites.

llms.txt provides a structured approach to eliminate guesswork and unlock a new era of API-driven AI coding.
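In practice, using llms.txt from a script takes only the standard library. The sketch below is an illustration under assumptions: the function names are hypothetical, the site URL must include its scheme, and the prompt wording is just one way to hand the file to a model.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def llms_txt_url(site: str) -> str:
    """llms.txt lives at the site root, like robots.txt."""
    return urljoin(site, "/llms.txt")


def fetch_llms_txt(site: str, timeout: float = 10.0) -> str:
    """Download the file as text (raises on network or HTTP errors)."""
    with urlopen(llms_txt_url(site), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def build_prompt(llms_txt: str, task: str) -> str:
    """Put the API description ahead of the task so the model reads it first."""
    return f"API reference (from llms.txt):\n{llms_txt}\n\nTask: {task}"
```

A typical call would be `build_prompt(fetch_llms_txt("https://docs.example.com"), "Write a client for the search endpoint")`, where the domain is a placeholder.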

To make it even easier, there’s now a full llms.txt directory listing APIs and websites that have already adopted the standard, including Anthropic, Eleven Labs, and Hugging Face: https://directory.llmstxt.cloud

And if your website doesn’t have one yet, you can auto-generate it with Firecrawl's new llms.txt Generator API: https://docs.firecrawl.dev/features/alpha/llmstxt

Wait, isn’t that similar to MCPs? When to use that over MCP?

If you want AI agents to know how your API works and generate correct code, use llms.txt. If you want agents to use an API, set up an MCP server. Think of llms.txt as the knowledge base for AI coding, and MCP as the execution layer for live interactions.

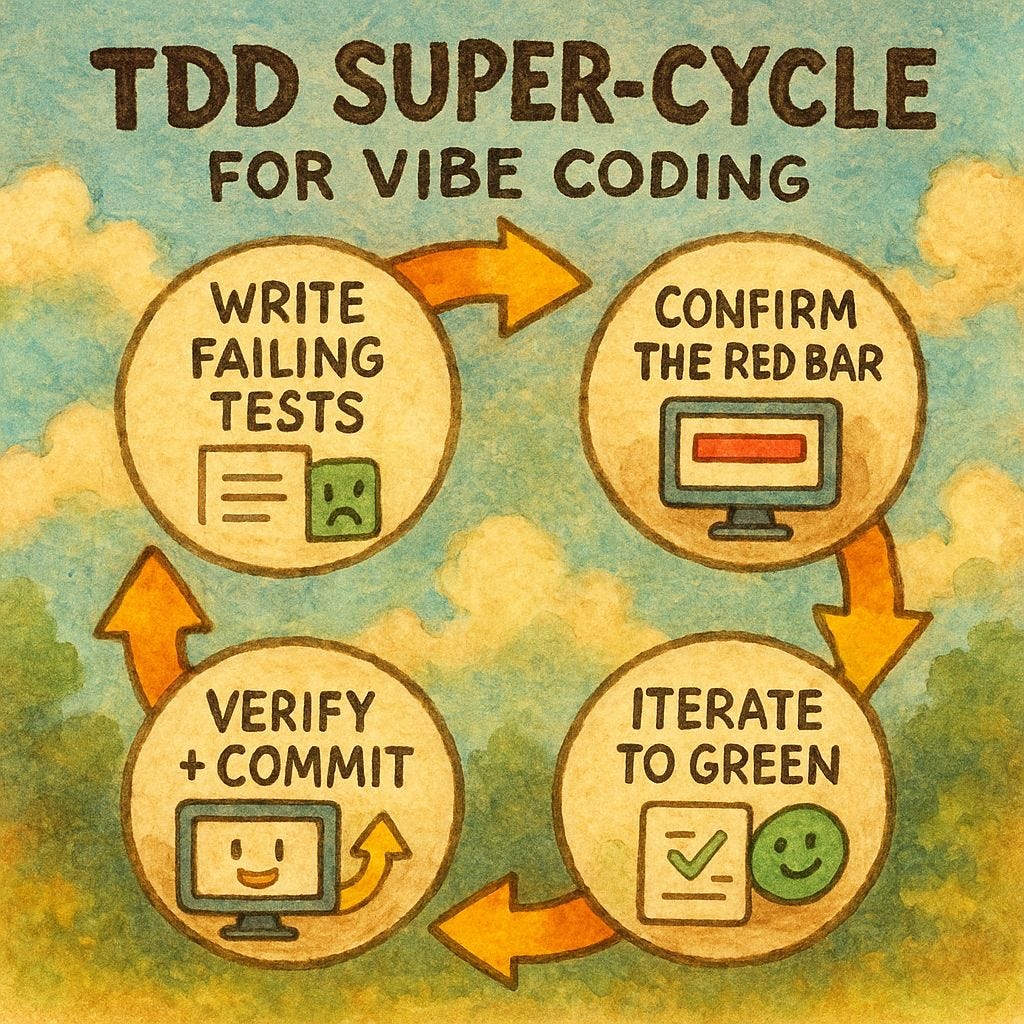

(6) Test-Driven Development with AI
