May 2024 - AI Tidbits Monthly Roundup

A 2M context window from Google, an any-to-any model from OpenAI, new open models for document understanding, Mistral’s first coding LLM, and a sneak peek into an LLM’s brain from Anthropic

Welcome to the monthly round-up, where we curate the firehose of AI research papers and tools so you won’t have to. If you're pressed for time and can only catch one AI Tidbits edition, this is the one to read, featuring the absolute must-knows.

Welcome to the May edition of AI Tidbits Monthly, where we unravel the latest and greatest in AI. This month has been particularly eventful, with major updates from industry leaders such as Google, OpenAI, and Microsoft.

In its Spring Updates event, OpenAI introduced GPT-4o, a multimodal model processing text, vision, and audio with real-time emotion recognition and adaptive speech responses. They expanded the free tier to include ChatGPT Plus features and announced a new Mac app, with a Windows version coming soon.

Google I/O was also filled with announcements, including Gemini 1.5 Pro, featuring a 2M-token context window, and Gemini 1.5 Flash, optimized for speed and cost-efficiency. Google unveiled Project Astra, a real-time multimodal AI assistant, and Veo, a long-form video generator, and announced the Trillium chip (TPU v6) for AI datacenters.

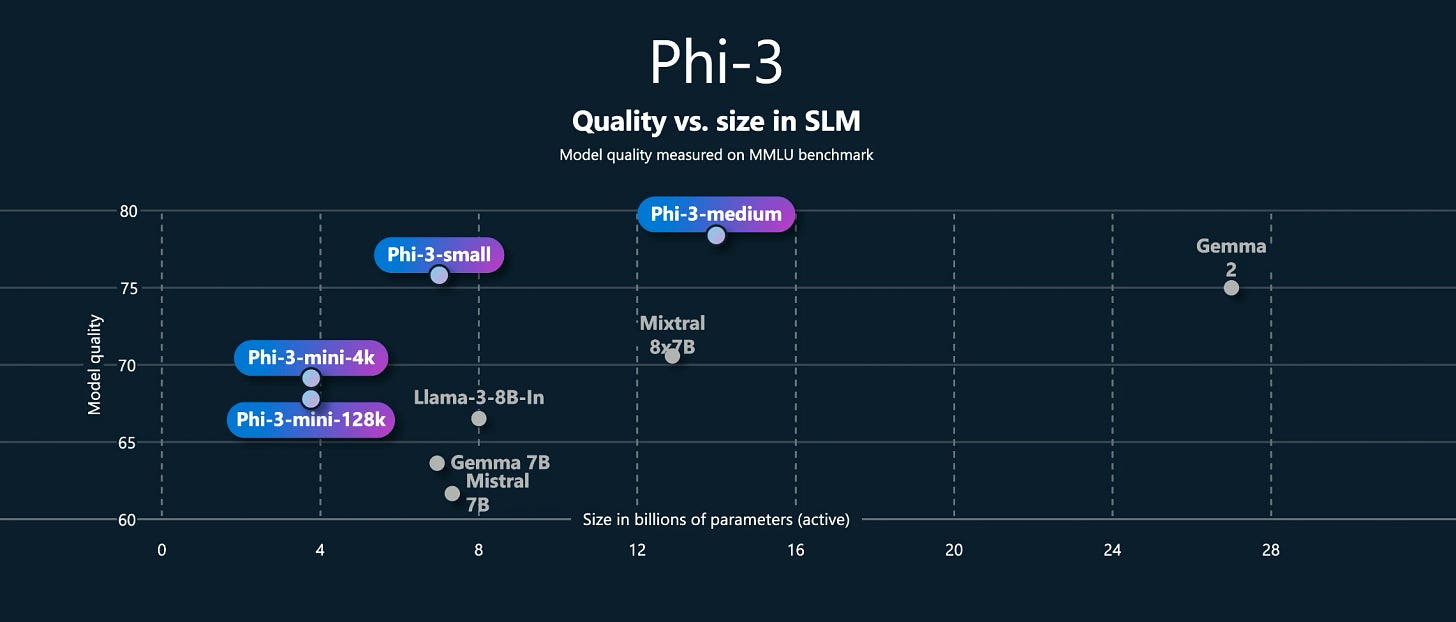

Lastly, Microsoft held its annual developers conference, Microsoft Build, introducing Copilot+ PCs, AI-optimized devices with advanced silicon and all-day battery life. They launched agents as part of Copilot to complete tasks autonomously, unveiled Phi-3 Vision and Phi Silica models, and announced AI-powered real-time video translation for the Edge browser.

In addition to these highlights, May's roundup includes groundbreaking advancements in language models (a new coding model from Mistral!), research, and open-source projects.

Let's dive in!

Overview

✨ Special feature: AI updates from Google, OpenAI, and Microsoft

Large Language Models

Open-source (10 entries)

Research (8 entries)

Autonomous Agents (2 entries)

Multimodal (5 entries)

Image and Video (5 entries)

Audio (4 entries)

Open-source Packages (6 entries)

Recent Deep Dives

Become a premium member to get full access to my content and $1k in free credits for leading AI tools and APIs like Perplexity, Replicate, and Hugging Face. It’s common to expense the paid membership from your company’s learning and development education stipend.

✨ Special feature: AI updates from Google, OpenAI, and Microsoft

OpenAI Spring Updates

OpenAI introduced GPT-4o, a multimodal model that processes text, vision, and audio, offering superior speed and cost-efficiency compared to GPT-4 Turbo. Key enhancements include real-time emotion recognition and adaptive speech responses, inspired by the movie "Her." The new voice assistant features real-time translation, facial expression reading, and dynamic voice adaptation, significantly improving interactivity. OpenAI expanded its free tier, which now includes features previously exclusive to ChatGPT Plus users along with limited access to GPT-4o. Additionally, a new desktop app for Mac was announced, with a Windows version coming soon, and potential integration with Apple devices is on the horizon.

Google I/O

At Google I/O 2024, Google unveiled Gemini 1.5 Pro, boasting an expanded context window of up to two million tokens, and Gemini 1.5 Flash, optimized for speed and cost-efficiency. New projects include Project Astra, a real-time multimodal AI assistant, and Veo, a long-form video generator. AI capabilities are being integrated across Google's ecosystem, enhancing Search, Gmail, Google Photos, and Android. The Trillium chip (TPU v6) was introduced, designed for AI datacenters to enhance processing power and energy efficiency, along with Gemini Nano, bringing on-device multimodal capabilities to Pixel devices.

Microsoft Build

Microsoft introduced Copilot+ PCs, a new category of AI-optimized Windows devices with advanced silicon and all-day battery life. The company expanded Copilot AI agents to handle autonomous tasks, with new capabilities launching in Copilot Studio. Phi-3 Vision, a compact multimodal AI model, and Phi Silica, a local language model optimized for Copilot+ PCs, were unveiled. Additionally, the Edge browser will soon feature AI-powered real-time video translation, enabling multilingual video accessibility and enhancing global communication.

Large Language Models (LLMs)

Open-source

Research
