AI Roundup 05/09 -> 05/16/2024

OpenAI's new any-to-any model that reasons over audio and video in real time, Google's 2M-token context window, and a new Gemini-powered virtual AI teammate that completes work tasks

Welcome to the weekly edition of AI Tidbits, where I curate the firehose of AI research papers and tools every week so you won’t have to.

Overview

✨ Special feature: OpenAI Spring Updates

✨ Special feature: Google I/O 2024

Language Models (7 entries)

Vision (2 entries)

Audio (2 entries)

Open-source Packages (5 entries)

Recent Deep Dives

✨ Special feature: OpenAI Spring Updates

GPT-4o - a novel omni model

OpenAI unveiled GPT-4o ("o" for "omni"), a multimodal model that natively processes text, audio, and images. It's twice as fast as GPT-4 Turbo and 50% cheaper, while outperforming it on benchmarks. GPT-4o can also natively generate output in multiple modalities, and it showcases advanced features such as real-time emotion recognition and adaptive speech responses, drawing inspiration from the movie "Her."

Voice assistant enhancements

OpenAI demoed an upgraded voice assistant capable of real-time translation, reading facial expressions, and dynamically adapting its voice. Users can interrupt it mid-response, and it can respond to visual input from the device's camera. These upgrades make ChatGPT considerably more interactive, expressive, and versatile than its previous voice mode.

Expanded free tier features

Most of the features previously exclusive to ChatGPT Plus are now available to free users, including web browsing, code interpreter, file and image uploads, memories, and access to GPTs and the GPT store. Free users also get limited access to the new GPT-4o model, with approximately 16 messages every three hours.

Desktop app and future plans

OpenAI announced a new desktop app for Mac, with a Windows version expected later this year. Upcoming features include image and video understanding. Together with a potential deal with Apple to integrate ChatGPT into iPhones, these plans point to a move toward more sophisticated AI assistants across devices.

✨ Special feature: Google I/O 2024

At its annual developer conference last Tuesday, Google announced a host of advancements and new features across its ecosystem, although most of them will only go live later this year.

Here are the key highlights from Google I/O 2024:

Launch of advanced AI models

Google’s Gemini 1.5 Pro is now publicly available and boasts an expanded context window of up to 2M tokens, up from 1M. Google is also releasing a new model, Gemini 1.5 Flash, optimized for speed and cost-efficiency.

Ambitious AI projects and tools

Among the ambitious projects unveiled are Project Astra, a real-time multimodal AI assistant, and Veo, a long-form video generator that competes with OpenAI’s Sora. Google is also enhancing Workspace with virtual AI teammates for project tracking and data analysis. Lastly, Google announced new creative tools: Music AI Sandbox for music creation and Imagen 3 for photorealistic image generation.

Integration of AI across Google products

Google is embedding AI capabilities, powered by Gemini 1.5 Pro and the newly introduced Gemini 1.5 Flash, throughout its ecosystem, including Search, Gmail, Google Photos, and Android. Notable updates include AI Overviews in Search, advanced photo search with "Ask Photos," and AI-driven email summaries and draft suggestions in Gmail.

Hardware and infrastructure innovations

Google unveiled Trillium (TPU v6), its sixth-generation TPU designed for AI datacenters, which Google says delivers a 4.7x increase in peak compute per chip over TPU v5e along with better energy efficiency. The chip aims to meet the growing demand for AI infrastructure and is positioned as a strong competitor to Nvidia's processors. Additionally, Gemini Nano will bring on-device multimodal capabilities to Pixel devices.

Language Models

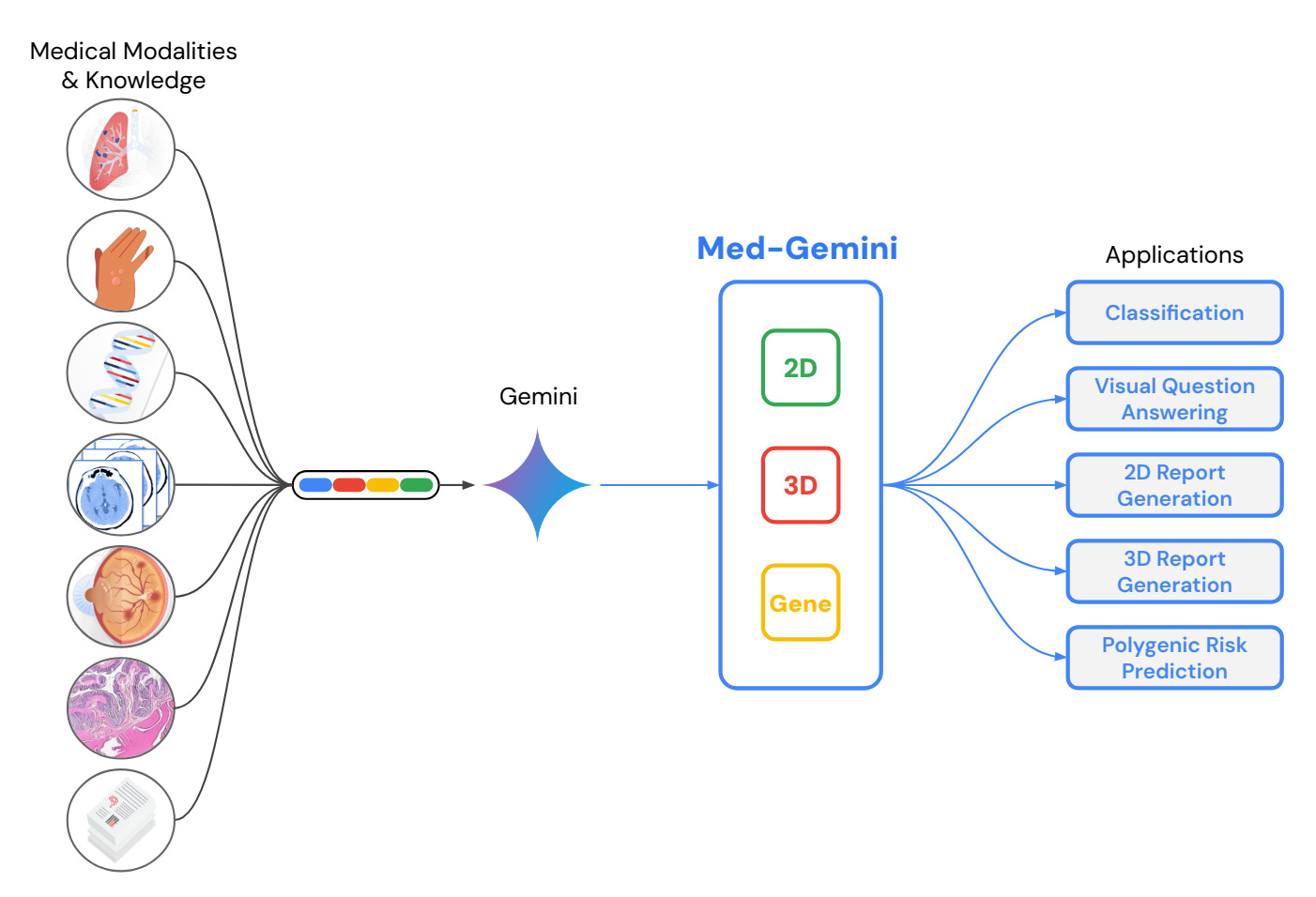

Vision

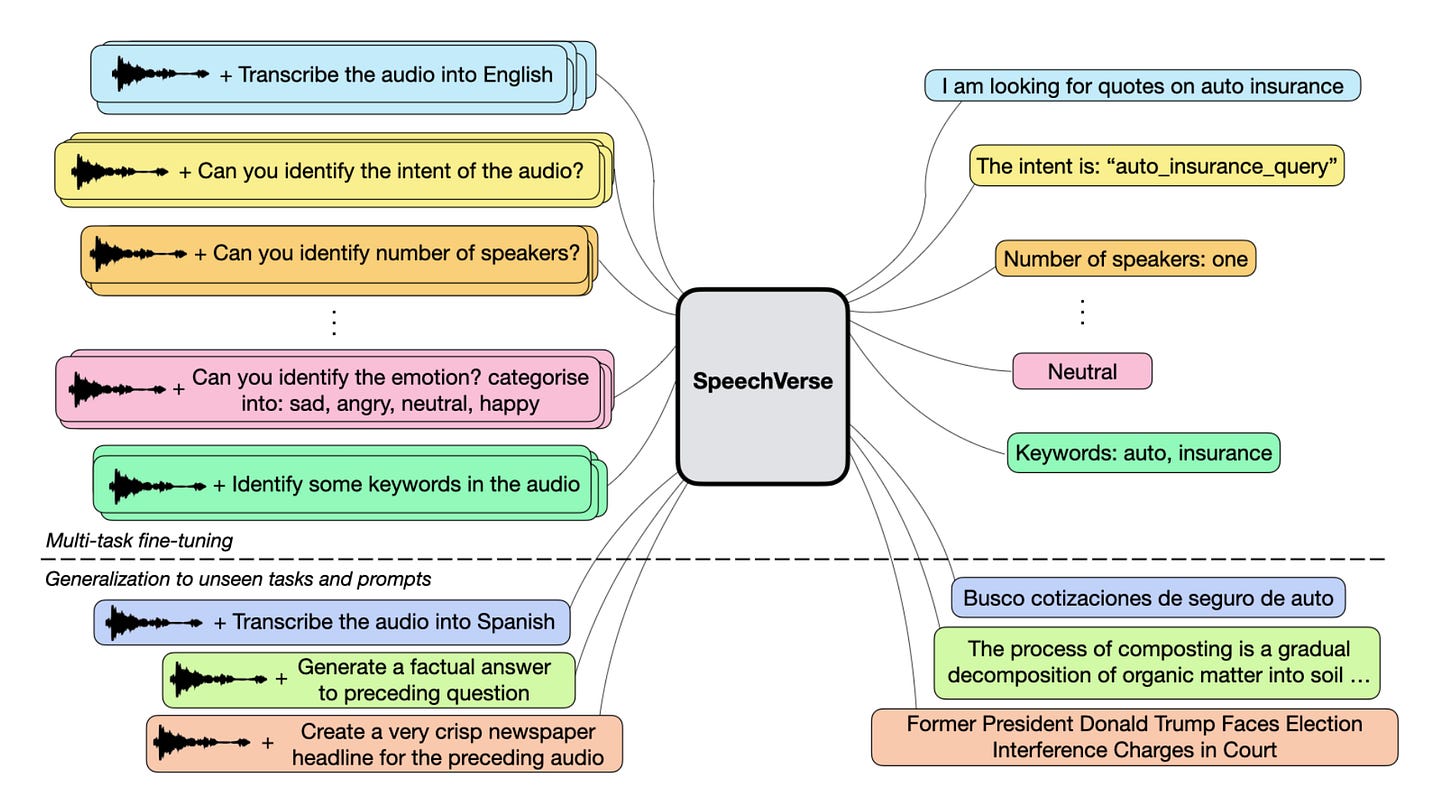

Audio

Open-source Packages

Personal AI - an open-source library for executing commands on iOS using open models like Llama 3

llm-ui - a React package for displaying rich and formatted LLM output

Pipecat - a framework for voice and multimodal conversational AI

Plus >70 more open-source packages for AI engineers

Last week’s AI Tidbits roundup

Reach AI builders, researchers, and entrepreneurs by partnering with AI Tidbits

If you find AI Tidbits valuable, share it with a friend and consider showing your support.