Economies of scale for foundational AI models

Big Tech strategic race for defensible AI models

Welcome to AI Tidbits Deep Dives: short posts offering a perspective on AI-related topics. Some of my previous ones covered consolidation in the AI space, autonomous agents, and document extraction with LLMs.

After listening to a recent podcast featuring Andrej Karpathy[1], I've pondered the concept of economies of scale in AI systems, specifically when it comes to data acquisition. The idea of economies of scale originated in the manufacturing world, where increased production led to lower per-unit costs. In the tech world, SaaS startups adapted this concept: the marginal cost of serving an additional customer approaches zero. Uber illustrates a related dynamic, leveraging its platform to achieve massive scale: as more drivers joined, more riders were attracted, creating a virtuous cycle that dramatically reduced per-ride costs while improving service quality.
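The manufacturing intuition can be written as a textbook average-cost formula. This is a generic illustration, not something taken from the podcast. Here F is the fixed cost (a factory, or building and training a model), c is the marginal cost of one more unit or customer, and N is the number of units served:

```latex
AC(N) = \frac{F}{N} + c, \qquad \lim_{N \to \infty} AC(N) = c
```

When c is close to zero, as in SaaS, average cost keeps falling as N grows. That is why scale itself becomes the advantage.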

Data-hungry generative AI models are driving major tech companies to pursue high-stakes data partnerships. Examples include OpenAI's agreements with TIME magazine and Reddit, and Meta's strategic alliance with Reuters to secure premium training content.

This post explores how economies of scale in the context of data apply to AI and generative models, focusing on three key areas: software vs. hardware, humanoid robots, and large language models.

Scaling AI software vs. hardware - autonomous vehicles

Tesla and Waymo represent two distinct approaches to achieving autonomous driving capabilities. Tesla, under Elon Musk's leadership, has pursued a vision of making self-driving technology accessible to the mass market through its consumer vehicles. Their strategy revolves around deploying a large fleet of cars equipped with cameras and neural networks that learn from real-world driving data. Waymo, originally Google's self-driving car project, has taken a more cautious approach, focusing on developing a robust autonomous driving system using high-end sensors and detailed mapping technology. While both companies aim to revolutionize transportation, their contrasting strategies highlight fundamental differences in how AI systems can be scaled.

Software-driven AI solutions, such as Tesla's AI systems, can scale more efficiently than hardware-based systems like those deployed by Google’s Waymo.

Waymo started deploying its autonomous fleet using Jaguar cars, featuring expensive hardware such as LiDAR, radar, and high-precision GPS to capture and interpret real-time data. Penetrating a market with a high-end offering is a familiar strategy: Uber started with Uber Black before offering the more affordable option, UberX.

Tesla is less hardware-dependent and relies mostly on cameras, which are substantially cheaper. A fully equipped Waymo vehicle costs about $200k, compared with a starting price of ~$39k for Tesla's Model 3.
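A back-of-the-envelope sketch makes the cost gap concrete. It uses the two per-vehicle figures cited above ($200k per Waymo vehicle, ~$39k for a Model 3). The $1B budget is a hypothetical number chosen only for illustration. The comparison also ignores operating, mapping, and compute costs.

```python
# Per-vehicle figures cited in the post; the budget is a hypothetical placeholder.
WAYMO_VEHICLE_COST = 200_000   # USD, fully equipped Waymo vehicle
TESLA_MODEL3_COST = 39_000     # USD, approximate Model 3 starting price
BUDGET = 1_000_000_000         # USD, hypothetical fleet budget


def vehicles_for_budget(budget: int, unit_cost: int) -> int:
    """Whole vehicles a budget buys, ignoring every cost except the vehicle itself."""
    return budget // unit_cost


waymo_fleet = vehicles_for_budget(BUDGET, WAYMO_VEHICLE_COST)
tesla_fleet = vehicles_for_budget(BUDGET, TESLA_MODEL3_COST)

print(f"Waymo-style vehicles: {waymo_fleet:,}")
print(f"Model 3 vehicles:     {tesla_fleet:,}")
print(f"Per-vehicle cost ratio: {WAYMO_VEHICLE_COST / TESLA_MODEL3_COST:.1f}x")
```

With these figures, the same spend buys roughly five camera-based cars for every sensor-heavy robotaxi.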

Software has a distribution advantage: once built, it can be deployed and iterated across millions of devices (here, vehicles) with minimal additional cost. In contrast, hardware solutions depend on physical components like sensors and processors, plus their maintenance. All of these scale more slowly and are harder to replicate.

In this case, Waymo is limited by its deployed hardware because its models are tightly coupled with their underlying sensor data.

Also, unlike Tesla's, Waymo's pace of data acquisition depends on the number of rides it provides, i.e., its fleet's utilization rate. This is why partnership deals like the one with Uber make sense.
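A toy model makes this dependence explicit. Every parameter below is a hypothetical placeholder, not a Waymo figure: fleet size, service hours, utilization, and average speed. The model also assumes data is collected only while carrying riders, as the sentence above frames it.

```python
from dataclasses import dataclass


@dataclass
class RobotaxiFleet:
    """Toy ride-hailing fleet; every field is a hypothetical input."""
    vehicles: int
    service_hours_per_day: float
    utilization: float      # fraction of service hours spent on rides, 0..1
    avg_speed_mph: float

    def ride_miles_per_day(self) -> float:
        # In this toy model, miles driven on rides are the data collected.
        return (self.vehicles * self.service_hours_per_day
                * self.utilization * self.avg_speed_mph)


current = RobotaxiFleet(vehicles=1_000, service_hours_per_day=20,
                        utilization=0.4, avg_speed_mph=15)
busier = RobotaxiFleet(vehicles=1_000, service_hours_per_day=20,
                       utilization=0.8, avg_speed_mph=15)

print(f"{current.ride_miles_per_day():,.0f} ride miles/day")
print(f"{busier.ride_miles_per_day():,.0f} ride miles/day")
```

Doubling utilization doubles the ride miles, and therefore the data, without adding a single vehicle. A demand partner like Uber can pull exactly this lever.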

While many view Waymo’s partnership with Uber as primarily a commercial move to increase revenues, the real strategic value lies in the diverse data it gathers from Uber’s wide geographic spread of drivers and riders, both across the U.S. and, potentially, globally. The Uber partnership could also serve as a stepping stone for Waymo to expand into broader delivery services. Imagine collaborations with major retailers like Walmart or Target, or fast-food giants such as McDonald’s—directly challenging Uber and logistics providers like UPS in the last-mile delivery race, generating even more data to improve its underlying self-driving technology.

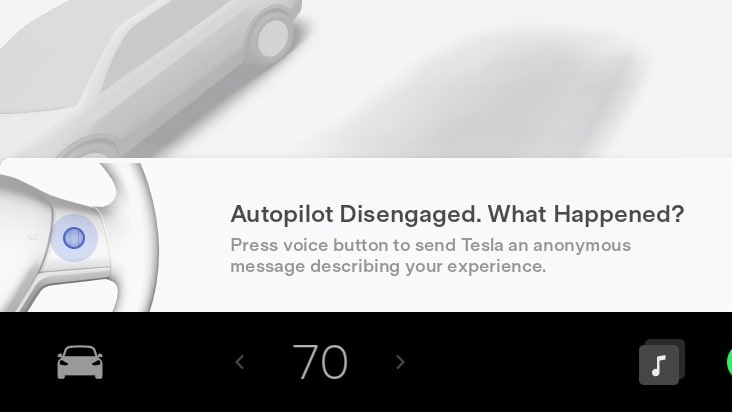

But beating Tesla on data quantity isn’t a simple feat. Each day, Tesla drivers drive 137 million miles, generating and sending data that includes human overrides, external cameras' video, location and trip logs, and, in some cases, footage from in-cabin cameras—an endless stream of real-world human-labeled data. Recognizing the value of detailed data, Tesla made it extremely easy for drivers to contribute richer feedback by allowing them to provide voice input immediately after disengaging the autopilot system.

It's not only about the quantity. Tesla benefits from the diversity of data its drivers generate—different driving styles, varied terrains, and ever-changing weather conditions.

More data * More diverse data == faster iteration and deployment of better AI models.

So, even though Waymo is objectively ahead, with real-world autonomous rides across California, Arizona, and Texas, Tesla could catch up quickly by pushing a software update across its vehicle fleet. Waymo, on the other hand, would likely need to change its sensor hardware to make a comparable leap. Hardware changes are slower and costlier to roll out at scale.

Humanoid robots and teleoperations - Better Labeled Data

Another key insight involves how humanoid robots tackle real-world environments.

I once thought the primary value of humanoid robots was their relatable, user-friendly design. Picture a square robot on wheels navigating your home versus a humanoid—it’s clear why human-like robots feel more intuitive and approachable.

But human-like robots play another significant role: they allow humans to operate them remotely, a practice known as teleoperation. For example, in a manufacturing setting, a skilled technician can wear motion-tracking equipment to guide a humanoid robot through complex assembly tasks, like connecting delicate electronic components or threading wires through tight spaces. The robot mirrors the technician's precise hand movements and finger positions in real time.

This approach is crucial for gathering high-quality labeled data in real-world conditions. Through teleoperations, companies like Figure can collect diverse, precisely labeled data that mirrors human decision-making in complex environments. Such data is critical for training AI systems to perform effectively in real-world scenarios.
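Teleoperation data is valuable because every observation arrives already paired with a human decision. Below is a minimal sketch of such a log, assuming a behavior-cloning setup. The `TeleopFrame` and `TeleopEpisode` schemas are hypothetical and do not reflect Figure's or Tesla's actual formats.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TeleopFrame:
    """One timestep of a hypothetical teleoperation log."""
    timestamp_s: float
    camera_frame_id: str            # reference to a stored camera image
    joint_positions: List[float]    # robot state: what the policy will observe
    operator_targets: List[float]   # joint targets commanded by the human: the label


@dataclass
class TeleopEpisode:
    task: str
    frames: List[TeleopFrame] = field(default_factory=list)

    def to_training_pairs(self) -> List[Tuple[Tuple[str, List[float]], List[float]]]:
        """Pair every observation with the action the human operator took."""
        return [
            ((f.camera_frame_id, f.joint_positions), f.operator_targets)
            for f in self.frames
        ]


episode = TeleopEpisode(
    task="connect ribbon cable",
    frames=[
        TeleopFrame(0.0, "cam_0000", [0.00, 0.10], [0.05, 0.12]),
        TeleopFrame(0.1, "cam_0001", [0.05, 0.12], [0.09, 0.15]),
    ],
)
for observation, action in episode.to_training_pairs():
    print(observation, "->", action)
```

In this setup no separate annotation pass is needed, because the operator's commanded joint targets are the labels.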

As Karpathy notes in the podcast, there's a significant transfer from automotive AI to humanoid robotics. Tesla's Optimus robot initially used the same computer and cameras as Tesla cars, showcasing how foundational AI systems can be adapted across different applications. This cross-pollination of technology and data between automotive and humanoid robotics accelerates development and scaling in both domains.

Economies of scale for LLMs

Language models also benefit from scale:

Inference becomes cheaper because server utilization is more predictable at scale, allowing tailored hardware and software optimization.
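The utilization argument comes down to simple arithmetic. A GPU costs the same per hour whether it is busy or idle, so cost per token falls as utilization rises. The sketch below uses hypothetical inputs: the hourly GPU price and peak throughput are placeholders, not any provider's real numbers.

```python
def cost_per_million_tokens(gpu_hourly_usd: float,
                            peak_tokens_per_s: float,
                            utilization: float) -> float:
    """Serving cost per 1M tokens; idle GPU time is still paid for."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = peak_tokens_per_s * 3600 * utilization
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs: $2.50 per GPU-hour, 2,000 tokens/s at full load.
for u in (0.3, 0.6, 0.9):
    cost = cost_per_million_tokens(2.50, 2_000, u)
    print(f"utilization {u:.0%}: ${cost:.2f} per 1M tokens")
```

Tripling utilization from 30% to 90% cuts the cost per token to a third. Predictable, high-volume traffic lets a large provider keep its servers busy.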

Broader distribution means more users, which then generate more data. Data is the #1 blocker for generative AI companies, making scale crucial for continued improvement.

Every user interaction with ChatGPT's feedback system, from rating responses to choosing between alternatives, becomes valuable training data. For example, when ChatGPT users click the thumbs-down icon or select their preferred generation out of two options, this feedback is stored in an internal database. Later, it can be used to evaluate future models or to apply Reinforcement Learning from Human Feedback (RLHF). This helps align the model better with ChatGPT users or, even better, personalize responses based on individual user preferences and interaction patterns.
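Below is a minimal sketch of how such logs could feed RLHF. It assumes a hypothetical record schema; `FeedbackEvent` is not OpenAI's actual format. Pairwise choices become (prompt, preferred, rejected) triples. A reward model is commonly trained on such triples with a Bradley-Terry-style loss, -log σ(r_preferred − r_rejected).

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class FeedbackEvent:
    """Hypothetical log record for one piece of user feedback."""
    prompt: str
    response_a: str
    response_b: Optional[str] = None  # set only for "choose one of two" comparisons
    chosen: Optional[str] = None      # "a" or "b" when response_b is set
    thumbs_down: bool = False         # single-response rating


def to_preference_pairs(events: List[FeedbackEvent]) -> List[Tuple[str, str, str]]:
    """Turn pairwise comparisons into (prompt, preferred, rejected) triples."""
    pairs = []
    for e in events:
        if e.response_b is None or e.chosen not in ("a", "b"):
            continue  # rating on a single answer: no pair to build
        if e.chosen == "a":
            pairs.append((e.prompt, e.response_a, e.response_b))
        else:
            pairs.append((e.prompt, e.response_b, e.response_a))
    return pairs


def bradley_terry_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)), computed in a numerically stable way."""
    margin = reward_preferred - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


events = [
    FeedbackEvent("Summarize this memo", "Draft A", "Draft B", chosen="b"),
    FeedbackEvent("Say hi", "Hello!", thumbs_down=True),
]
pairs = to_preference_pairs(events)
print(pairs)
print(bradley_terry_loss(2.0, 0.5), bradley_terry_loss(0.5, 2.0))
```

A thumbs-down on a single response carries no pairwise comparison, so this sketch leaves it out of the reward-model data. Such ratings fit the evaluation use described above better.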

Theoretically, OpenAI and other model providers could go as far as clustering users by demographics such as age and political views to better align ChatGPT's responses. When a model is better aligned with users, it becomes more engaging. That increases both usage and data generation, continuously fueling the improvement cycle in a positive feedback loop.

OpenAI started collecting more detailed usage feedback for its new Advanced Voice Mode.

Meta's launch of its AI chatbot is a prime example of this strategy. By integrating AI assistants into widely used platforms like Facebook and Instagram, Meta can collect vast amounts of real-world interaction data. Such data is critical for improving its models’ performance and adaptability across diverse contexts.

Such a strategy goes beyond language models, expanding to image, video, and audio. Meta contributes to the open-source AI ecosystem with the multimodal Llama and the state-of-the-art image segmentation model Segment Anything 2. In doing so, it leverages both users and AI developers to improve the same underlying technology that powers Instagram, WhatsApp, and its recent blockbuster, the Ray-Ban Meta smart glasses.

The Future of AI Systems

The next frontier in AI development involves:

Balancing software scalability and hardware constraints

Building data collection devices (e.g., humanoid robots) that resemble humans and operate in the real world, to benefit from transfer learning and easier human labeling

Maximizing user distribution for (a) data collection (see Meta’s example above) and (b) brand recognition

Tech giants like Google and Microsoft are already positioning themselves for this AI-dominated future, recognizing the critical role of economies of scale in the race to shape tomorrow's AI landscape.

From autonomous vehicles to humanoid robots and LLMs, the ability to efficiently scale both software and data collection is becoming a key differentiator. As AI continues to evolve, companies that can effectively leverage these economies of scale will likely lead the way in innovation and market dominance.

![](https://substackcdn.com/image/fetch/$s_!IZxo!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0c26a37e-faa2-431b-9461-9c2eef61bc20_600x338.gif)
