The Voice Agents Toolkit for Builders

Curated frameworks, tools, and libraries to launch reliable and efficient voice agents

Welcome to a new post in the AI Agents Series - helping AI developers and researchers deploy and make sense of the next step in AI.

Over the past few weeks, I've explored how AI agents will fundamentally reshape the internet. From introducing the concept of "agent-responsive design" - where websites optimize for AI interaction - to examining the infrastructure needed for agent-to-agent communication protocols, my previous posts laid the groundwork for understanding the agent-centric future of the web.

Now, it's time to tackle perhaps the most natural and transformative interface for these agents: voice.

While text-based agents have dominated the early wave of AI applications, voice represents the next frontier in human-AI interaction. It's not just another interface - it's the most intuitive and accessible way for humans to interact with AI systems. This intersection of voice technology and AI agents creates unprecedented opportunities for developers, so I'm excited to share this comprehensive guide to the voice AI ecosystem.

After decades of frustrating experiences with narrowly scoped voice assistants that cannot be interrupted and follow a rigid rule-based script, we're witnessing a fundamental shift in what's possible. Three key developments drive this shift:

Breakthrough in speech-native models - the releases of OpenAI's Realtime API last October and Google's Gemini 2.0 Multimodal Live API last week mark a transition from traditional "cascading architectures" (where speech is converted to text, processed, and converted back) to speech-native models that process audio directly with unprecedented quality. With OpenAI's recent 60% Realtime API price reduction and its hiring of WebRTC's founder, we're seeing a clear industry push toward making real-time voice interactions accessible and affordable.

Dramatic reduction in complexity - what previously required hundreds of data scientists can now be achieved by small teams of AI engineers. We're seeing companies reach substantial ARR with lean teams by building specialized voice agents for specific verticals - from restaurant order-taking to lead qualification for sales teams.

Infrastructure maturity - the emergence of robust developer platforms and middleware solutions has dramatically simplified voice agent development. These tools handle complex challenges like latency optimization, error handling, and conversation management, allowing developers to focus on building unique user experiences.

This convergence creates a unique opportunity for builders. For the first time in human history, we have god-like AI systems that converse like humans. The era of capable voice AI has arrived, opening up vast opportunities for innovators and developers alike.

Unlike web or mobile app development, where patterns are well-established, voice AI is still in its formative stage. The winners in this space will be those who can combine technical capability with a deep understanding of specific industry needs.

In this post, I'll provide a curated overview of the open-source and commercial tools available for developers building voice agents. While VCs segment the market based on investment opportunities, I'll map the ecosystem based on what matters to developers: APIs, SDKs, and tools you can actually use today. What is the go-to model for speech-to-text? The best API for speech synthesis? Which tools do other builders rely on to develop voice agents? With the holiday season upon us, there's no better time to build your voice agent, turn it into a company, or automate a personal workflow.

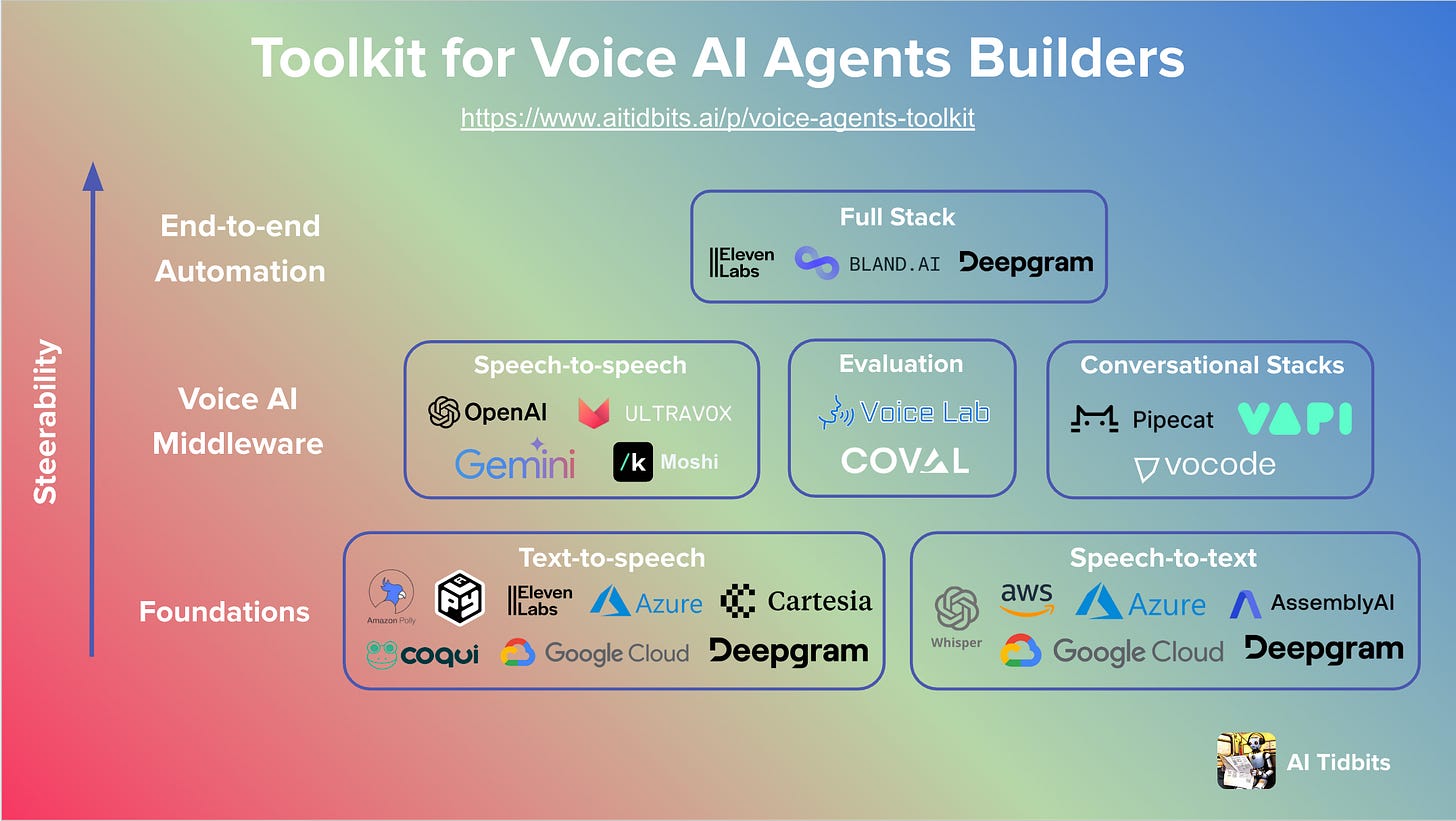

Categories covered in this piece, along with my recommended stack and tips for choosing the right architecture at the end:

Foundations

→ Speech-to-text

→ Text-to-speech

Voice AI Middleware

→ Speech-to-speech

→ Conversational Agents Frameworks

→ Evaluation

End-to-end Automation

→ Full Stack

Is there a package or tool you think should have been part of this list? Comment on this post and I’ll take a look.

Become a premium AI Tidbits subscriber and get over $1k in free credits to build AI agents with Vapi, Claude, and other leading AI tools (Hugging Face, Deepgram, etc.), along with exclusive access to the LLM Builders series and in-depth explorations of crucial topics, such as the future of the internet in an era driven by AI agents.

Many readers expense the paid membership from their learning and development education stipend.

Foundations

Speech-to-text (STT)

Automated transcription models have improved significantly over the past couple of years, featuring low latency and cost along with a decreasing WER (Word Error Rate). When selecting a speech-to-text model, consider these key factors:

Latency requirements - for real-time conversations, you need models that can process speech with under 300ms of latency. Cloud APIs like AssemblyAI and Deepgram excel here, while Whisper variants are better suited for async transcription.

Deployment constraints - open source models like Whisper.cpp offer flexibility for edge deployment and privacy-sensitive applications but require more engineering effort to optimize. Cloud APIs provide easier scaling but with higher operational costs.

Language and accent support - while most models handle standard English well, performance varies significantly for other languages and accents. Whisper has broad language support, while specialized APIs like AssemblyAI may offer better accuracy for specific use cases.
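To make the WER figure mentioned above concrete, here is a minimal sketch of how it is commonly computed: the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. The function name and the sample transcripts are illustrative assumptions, not taken from any particular STT library, and real benchmarks usually apply more careful text normalization (punctuation, numerals) than the lowercasing done here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words (word-level Levenshtein distance).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical restaurant-order transcript: 2 wrong words out of 7.
reference = "i would like two large pepperoni pizzas"
hypothesis = "i would like to large pepperoni pizza"
print(f"WER: {word_error_rate(reference, hypothesis):.3f}")
```

Running the same function over a small set of recordings from your own domain (menu items, product names, accents your users actually have) is often more telling than a vendor's headline WER.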

Open-source (mostly Whisper-based)

Commercial

Cloud Providers: Azure, Google, AWS. There don’t seem to be significant differences between the cloud providers, so I usually opt for the one I already use for other services like storage and compute.

👆 RealtimeSTT features low-latency transcription with wake word activation

Text-to-speech (TTS)

Text-to-speech, dominated by ElevenLabs’ costly API as recently as June 2023, has become a competitive market with numerous low-latency, cost-effective alternatives. Modern TTS systems go far beyond simple voice synthesis, offering capabilities like:

Voice cloning - creating custom voices from just a few minutes of audio samples, enabling personalized agent voices

Emotional synthesis - adjusting tone, pace, and emphasis to convey different emotions and speaking styles

Multi-speaker synthesis - seamlessly switching between different voices in a single conversation

The most significant recent advancement is the emergence of "speech-native" models that can generate highly natural speech without the traditional text-intermediate step. This enables more fluid conversations with lower latency and better preservation of emotional nuances.

Open-source

Commercial

ElevenLabs (just released Flash, a model with 75ms latency in 32 languages)

Other popular APIs: Play, Amazon Polly, Azure AI Speech, Google

👆 Hello Patient’s voice agent is powered by Cartesia

Voice AI Middleware

Speech-to-speech

OpenAI sparked everyone’s imagination when it demoed GPT-4o last May, showcasing real-time conversational AI that can be interrupted. Until a few months ago, this kind of technology wasn’t available to developers. Now, we have commercial APIs at a reasonable price (OpenAI dropped the price for its Realtime API by 60% last week) and open-source alternatives.

Speech-to-speech models are specialized AI systems that process and respond to voice input directly in the audio domain without converting to and from text. They are superior to cascading workflows that involve sequential speech-to-text, text-to-text, and text-to-speech models for several key reasons:

Ultra-low latency - by processing audio natively, these models achieve response times of ~300 milliseconds, matching natural human conversation speeds. Traditional cascading approaches often exceed 1000ms, creating noticeable delays.

Better contextual understanding - speech-to-speech models preserve important non-textual elements like tone, emotion, and conversation dynamics that are often lost in text conversion.

Natural interruptions - unlike cascading systems that require rigid turn-taking, speech-native models can listen and process input even while speaking, enabling natural interruptions and more fluid conversations.

Improved conversation quality - by maintaining the audio context throughout the interaction, these models better handle overlapping speech, background noise, and the natural rhythm of human dialogue.
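The latency gap described above comes from simple addition: in a strictly sequential cascade, each stage has to finish (or at least emit its first output) before the next one starts, so the delays stack. The sketch below illustrates that arithmetic. The per-stage numbers are hypothetical placeholders chosen only to match the rough orders of magnitude mentioned in this post, not measurements of any specific provider, and the `Stage` type is made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: int  # delay before this stage hands output to the next one


def total_latency_ms(stages: list[Stage]) -> int:
    """In a sequential pipeline, per-stage delays add up."""
    return sum(stage.latency_ms for stage in stages)


def slowest_stage(stages: list[Stage]) -> Stage:
    """The stage to optimize first when the pipeline is over budget."""
    return max(stages, key=lambda stage: stage.latency_ms)


# Hypothetical figures, for illustration only.
CASCADE = [
    Stage("speech-to-text", 300),
    Stage("LLM time to first token", 500),
    Stage("text-to-speech first audio", 250),
    Stage("network hops between services", 100),
]
SPEECH_NATIVE = [Stage("speech-to-speech model", 300)]

for label, pipeline in [("cascade", CASCADE), ("speech-native", SPEECH_NATIVE)]:
    print(f"{label}: {total_latency_ms(pipeline)} ms "
          f"(slowest stage: {slowest_stage(pipeline).name})")
```

Measuring your own stages this way shows where a cascading pipeline loses time. Streaming between stages can overlap some of these delays, which this simple sum does not model.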

Open-source

Commercial

👆 Conversing with Google’s new Gemini 2.0 (source: Gemini Playground)

Conversational Agents Frameworks

Conversational agent frameworks provide the essential scaffolding needed to build production-ready voice AI applications. These frameworks abstract away much of the complexity in managing real-time voice infrastructure, handling edge cases, and orchestrating the various components of a voice application.

Pipecat offers an open-source approach for developers who want complete control over their stack and need flexibility to build custom multimodal experiences. Vocode provides a more opinionated toolkit that simplifies the process of creating voice-based LLM agents, handling much of the complexity around conversation management and error handling. Vapi takes this further by offering a full-featured platform that abstracts away infrastructure challenges while providing enterprise-grade reliability and scalability.

The choice between these frameworks often comes down to the specific requirements of your project - whether you need the flexibility of open-source, the simplicity of a focused toolkit, or the robustness of a complete platform.

Open-source

Commercial

Evaluation

One of the most significant challenges in voice AI development is accurately measuring agent performance. Unlike text-based interactions, where metrics like response accuracy and latency are relatively straightforward, voice agents require a more nuanced evaluation approach that considers elements like conversation flow, emotional appropriateness, and real-world task completion.

Technical performance metrics form the foundation of evaluation. Response latency should target under 300ms for natural conversation and be measured across different percentiles, while speech recognition accuracy is tracked through Word Error Rate (WER), with special attention to domain-specific terminology.

Conversational quality metrics measure the agent's ability to handle natural dialogue. This includes turn management metrics like interruption handling and end-of-speech detection, along with task completion metrics such as success rate and the number of turns needed to complete a task.

User experience metrics round out the evaluation framework, tracking call abandonment rates, average call duration, user sentiment scores, and the frequency of repeat requests.
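As a rough illustration of how a few of these metrics could be aggregated from call logs, here is a minimal sketch. The `Call` record and its field names are assumptions made up for this example (your logging schema will differ), and the percentile uses the simple nearest-rank method rather than interpolation.

```python
import math
from dataclasses import dataclass


@dataclass
class Call:
    response_latencies_ms: list[float]  # one entry per agent response
    turns: int                          # total conversational turns in the call
    task_completed: bool
    abandoned: bool                     # caller hung up before resolution


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(calls: list[Call]) -> dict:
    if not calls:
        raise ValueError("need at least one call")
    latencies = [ms for call in calls for ms in call.response_latencies_ms]
    completed = [call for call in calls if call.task_completed]
    return {
        "latency_p50_ms": percentile(latencies, 50),
        "latency_p95_ms": percentile(latencies, 95),
        "task_success_rate": len(completed) / len(calls),
        "abandonment_rate": sum(call.abandoned for call in calls) / len(calls),
        # Only completed calls count here; None when nothing completed.
        "avg_turns_to_complete": (
            sum(call.turns for call in completed) / len(completed)
            if completed else None
        ),
    }


# Hypothetical call logs, for illustration only.
calls = [
    Call([250, 280, 900], turns=6, task_completed=True, abandoned=False),
    Call([310, 240], turns=4, task_completed=True, abandoned=False),
    Call([1200], turns=2, task_completed=False, abandoned=True),
]
for metric, value in summarize(calls).items():
    print(metric, value)
```

Looking at p95 alongside the median matters because a handful of slow responses can make a conversation feel broken even when the typical response is fast.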

When done right, evaluation pays off directly - it enables developers to identify subtle issues that could frustrate users (like awkward pauses or mismatched emotional responses), optimize for natural conversation patterns, and ensure consistent performance across different accents and background noise conditions.

Open-source

Commercial

End-to-end Automation

Full Stack

The Stripe for voice agents. From prompt to a call.

While building with individual components offers maximum flexibility, full-stack solutions make sense when you need to quickly deploy production-ready voice agents without managing infrastructure complexity. These platforms are particularly valuable for teams that need to focus on their core business logic and customer experience rather than wrestling with the intricacies of voice infrastructure, latency optimization, and scaling challenges.

These solutions typically provide end-to-end capabilities, including pre-built integrations with popular business systems (CRMs, scheduling tools, payment processors), sophisticated error handling, automated failover, and comprehensive analytics. They handle complex engineering challenges like maintaining low latency during peak loads, graceful degradation during API failures, and automated quality monitoring. While you trade some flexibility compared to building your own stack, these platforms can dramatically accelerate time-to-market and reduce engineering overhead.

👆 A Deepgram-powered voice agent handles a food order

Choosing the right architecture for your voice agent

When selecting a voice AI architecture, developers face a fundamental choice between using full-stack platforms and assembling custom solutions from individual components. This decision ultimately comes down to three key factors: latency requirements, cost constraints, and the need for fine-grained control over the conversation flow.
