September 2023 - AI Tidbits Monthly Roundup

OpenAI's DALL-E 3 and multimodal GPT, novel prompting techniques paving the way to reliable AI, new LLMs for extracting information from complex documents, and a SOTA model from the "French OpenAI"

Welcome to a subscriber-only edition 🔒 of AI Tidbits, where I curate the firehose of AI research papers and tools so you won’t have to.

This is the monthly curated round-up, so if you're pressed for time and can only catch one AI Tidbits edition, this is the one to read—featuring the absolute must-knows.

If you find AI Tidbits valuable, share it with a friend and consider showing your support.

Welcome to the September edition of AI Tidbits, covering the latest in AI. September was a particularly innovative month, full of research and product launches that showcase the pace of progress in the field.

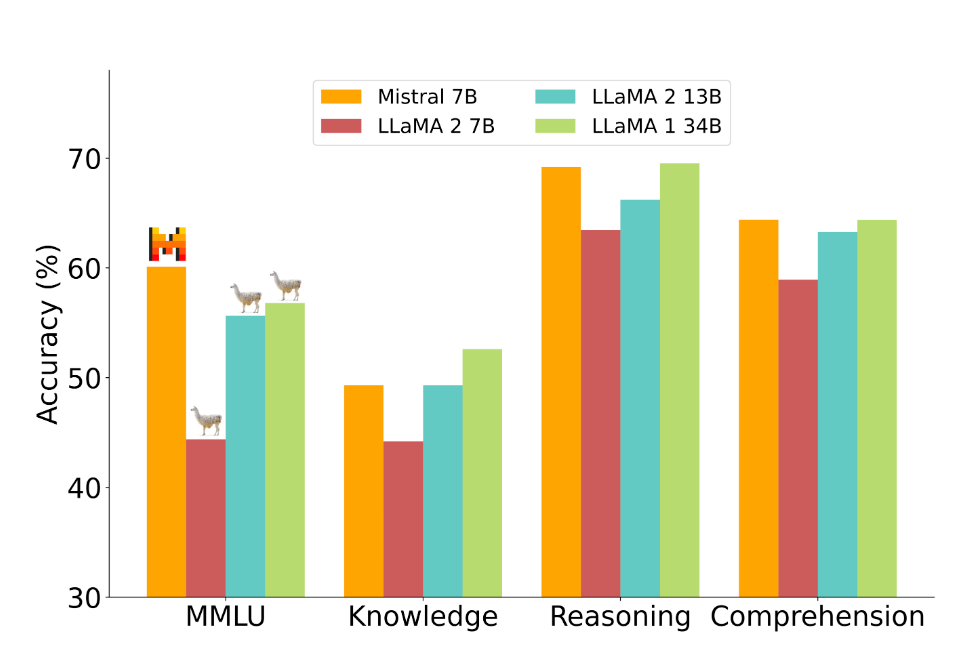

Leading the charge were new prompting techniques that substantially boost the accuracy of language models while reducing hallucinations. These advances pave the way for more reliable, trustworthy AI systems. On the open-source front, Mistral and Abacus released powerful new models, Mistral 7B and Giraffe 70B, respectively, outperforming previous open-source models such as Llama 2.

Commercially, heavy hitters like OpenAI, Google, and Meta charged ahead with high-profile releases. OpenAI unveiled DALL-E 3 and the long-anticipated multimodal GPT-4 Vision, while Google released a substantial update to its Bard chatbot, and Meta announced its own swarm of LLM-powered chatbots.

These and many more exciting updates across multimodal AI, video models, and open-source tools are part of this month’s roundup.

Let's dive in!

Overview

Large Language Models

- Prompting techniques (5 entries)
- Open-source (8 entries)
- Research (8 entries)
- Commercial (5 entries)

Autonomous Agents (3 entries)

Image, Audio, and Video (8 entries)

Multimodal (4 entries)

Cool Tools (3 entries)

Open-source (3 entries)

Other (3 entries)

Recent AI Tidbits Deep Dives

Large Language Models (LLMs)

Special feature: Prompting techniques

Open-source

Research
