LinkedIn Highlights, Mar 2025

Document AI breakthroughs from GOT-OCR, Maestro, and Mistral, Vellum's agent autonomy framework, Skyvern's visual web automation, plus performance tips for ChatGPT and reasoning models

Welcome to LinkedIn Highlights!

Each month, I share my five top-performing LinkedIn posts, bringing you the best of AI straight from the frontlines of academia and industry. This edition includes seven posts instead of five because there were just too many good ones to leave out!

This post covers groundbreaking developments in AI agents and document processing, from Anthropic's foundational patterns for building effective agents to LlamaIndex's new Agentic Document Workflows. You'll learn about DeepSeek's surprising findings on prompting reasoning models, discover cutting-edge tools for PDF processing and web automation, and explore how LLMs handle structured table data.

As a frequent LinkedIn contributor, I regularly share insights on groundbreaking papers, promising open-source packages, and significant AI product launches. These posts offer more depth and detail than our weekly snippets, providing a comprehensive look at the latest AI developments.

Whether you're not on LinkedIn or simply missed a post, this monthly roundup ensures you stay informed about the most impactful AI news and innovations.

1. GOT-OCR 2.0

I finally had the chance to explore a new document extraction technique introduced in a paper last September. Bonus: the code and model are free to use (Apache 2.0).

This new approach, called General OCR Theory (GOT-OCR2.0), suggests a unified end-to-end model that handles tasks traditional OCR systems struggle with.

Unlike legacy OCR, which relies on complex multi-modular pipelines, GOT uses a simple encoder-decoder architecture with only 580M parameters that outperforms models 10-100× larger.

Paper highlights:

Unified architecture - a high-compression encoder paired with a long-context decoder that handles everything from scene text to complex formulas

Stunning performance - delivers nearly perfect text accuracy on documents, surpassing Qwen-VL-Max (>72B) and other leading models

Versatility beyond text - processes math formulas, molecular structures, and even geometric shapes

Interactive capabilities - supports region-level recognition guided by coordinates or colors

I just tried it out and was blown away by how it handles complex documents with mixed content types. Its ability to convert math formulas from arXiv PDFs to Mathpix format is reason enough to explore this model.

What strikes me most about GOT is how it challenges the notion that only billion-parameter LLMs can tackle complex visual tasks.

Paper + code + model can be found in their GitHub repo https://github.com/Ucas-HaoranWei/GOT-OCR2.0

2. Six Levels of Agentic Behavior

I came across a new framework that brings clarity to the messy world of AI agents with a 6-level autonomy hierarchy.

While most definitions of AI agents are binary (it either is or isn't), a new framework from Vellum introduces a spectrum of agency that makes far more sense for the current AI landscape.

The six levels of agentic behavior provide a clear path from basic to advanced:

Level 0 - Rule-Based Workflow (Follower)

No intelligence—just if-this-then-that logic with no decision-making or adaptation. Examples include Zapier workflows, pipeline schedulers, and scripted bots—useful but rigid systems that break when conditions change.

Level 1 - Basic Responder (Executor)

Shows minimal autonomy—processing inputs, retrieving data, and generating responses based on patterns. The key limitation: no control loop, memory, or iterative reasoning. It's purely reactive, like basic implementations of ChatGPT or Claude.

Level 2 - Use of Tools (Actor)

Not just responding but executing: capable of deciding to call external tools, fetch data, and incorporate results. This is where most current AI applications live, including ChatGPT with plugins or Claude with function calling. Still fundamentally reactive, without self-correction.

Level 3 - Observe, Plan, Act (Operator)

Managing execution by mapping steps, evaluating outputs, and adjusting before moving forward. These systems detect state changes, plan multi-step workflows, and run internal evaluations. Examples like AutoGPT or LangChain agents attempt this, though they still shut down after task completion.

Level 4 - Fully Autonomous (Explorer)

Behaving like stateful systems that trigger actions autonomously and refine execution in real time. These agents "watch" multiple streams and execute without constant human intervention. Cognition Labs' Devin and Anthropic's Claude Code aspire to this level, but we're still in the early days, with reliable persistence being the key challenge.

Level 5 - Fully Creative (Inventor)

Creating its own logic, building tools on the fly, and dynamically composing functions to solve novel problems. We're nowhere near this yet: even the most powerful models (o1, o3, DeepSeek R1) still overfit and follow hardcoded heuristics rather than demonstrating true creativity.

The framework shows where we are now: production-grade solutions up to Level 2, with most innovation happening at Levels 2-3. This taxonomy helps builders understand what kind of agent they're creating and what capabilities correspond to each level.
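To make the jump from Level 2 to Level 3 more tangible, here is a minimal Python sketch of my own. It is not code from Vellum's report. The `flaky_search` tool and both agent functions are hypothetical stand-ins, and no real LLM is called. The only point is the control flow: a Level 2 actor calls a tool once and returns whatever comes back, while a Level 3 operator evaluates the output and adjusts before moving forward.

```python
def flaky_search(query: str, attempt: int) -> str:
    """Hypothetical tool: returns nothing useful on the first attempt."""
    return "" if attempt == 0 else f"results for {query!r}"


def level2_actor(query: str) -> str:
    """Level 2: decide to call a tool once and pass the result through.

    There is no check on the output, so a bad result goes straight to the user.
    """
    return flaky_search(query, attempt=0)


def level3_operator(query: str, max_steps: int = 3) -> str:
    """Level 3: act, evaluate the output, adjust, and only then move on."""
    for attempt in range(max_steps):
        result = flaky_search(query, attempt)  # act
        if result:                             # observe and evaluate
            return result
        query = query + " (refined)"           # adjust the plan and retry
    return "gave up"                           # stop once the step budget is spent


if __name__ == "__main__":
    print(repr(level2_actor("gpu prices")))
    print(repr(level3_operator("gpu prices")))
```

In a real system the `if result:` check would likely be a model-based or rule-based evaluation rather than a truthiness test, but the loop shape stays the same.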

Full report https://www.vellum.ai/blog/levels-of-agentic-behavior

3. Skyvern

Traditional web automation is dying as developers waste countless hours maintaining brittle XPath selectors. Skyvern, a new open-source package, revolutionizes browser automation by combining LLMs with computer vision.

Unlike traditional automation tools that break when websites change, Skyvern uses visual understanding and natural language processing to dynamically interpret and interact with web interfaces. This enables developers to:

→ Build website-agnostic automations - create workflows that work across multiple sites without custom code

→ Handle complex inference tasks - automatically reason through form responses like eligibility questions

→ Execute multi-step sequences - coordinate multiple agents for tasks like authentication, navigation, and data extraction
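To illustrate why position-based selectors are so brittle, here is a small sketch using only Python's standard library. It does not use Skyvern's API, and the two page snippets are made up for illustration. A positional XPath works on the first version of a page and silently fails after a redesign, while a lookup keyed on the visible label (a very crude stand-in for "reading" the page the way a human would) keeps working.

```python
import xml.etree.ElementTree as ET

# Hypothetical sign-up page before a redesign.
PAGE_V1 = """
<html><body>
  <div><h1>Sign up</h1></div>
  <div><form>
    <label for="email">Email</label><input id="email" name="email"/>
    <label for="zip">ZIP code</label><input id="zip" name="zip"/>
  </form></div>
</body></html>
""".strip()

# Same form after a redesign: a banner was added and ids/names were regenerated.
PAGE_V2 = """
<html><body>
  <div><p>Cookie banner</p></div>
  <div><h1>Sign up</h1></div>
  <div><form>
    <label for="f-17">Email</label><input id="f-17" name="user_email"/>
    <label for="f-18">ZIP code</label><input id="f-18" name="postal"/>
  </form></div>
</body></html>
""".strip()

# Positional selector: "first input in the form inside the second div".
BRITTLE_XPATH = "./body/div[2]/form/input[1]"


def find_by_position(page: str):
    """Return the element at the hard-coded position, or None if the layout moved."""
    return ET.fromstring(page).find(BRITTLE_XPATH)


def find_by_label(page: str, label_text: str):
    """Return the input tied to a visible label, regardless of where it sits."""
    root = ET.fromstring(page)
    for label in root.iter("label"):
        if (label.text or "").strip().lower() == label_text.lower():
            target_id = label.get("for")
            for field in root.iter("input"):
                if field.get("id") == target_id:
                    return field
    return None


if __name__ == "__main__":
    print(find_by_position(PAGE_V1) is not None)  # selector matches the old layout
    print(find_by_position(PAGE_V2) is None)      # selector breaks after the redesign
    print(find_by_label(PAGE_V2, "Email").get("name"))
```

The label lookup is still rule-based, so it is only a hint at the idea. Tools in this space go further by interpreting the rendered page visually instead of relying on markup structure at all.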

Packages like Skyvern signal the emergence of truly adaptable web agents. Instead of hard-coded rules, we see AI systems that can understand and navigate the web like humans do - reading content, making decisions, and handling edge cases autonomously. I wrote more about it in my latest AI Agents blog series.

GitHub repo https://github.com/Skyvern-AI/skyvern

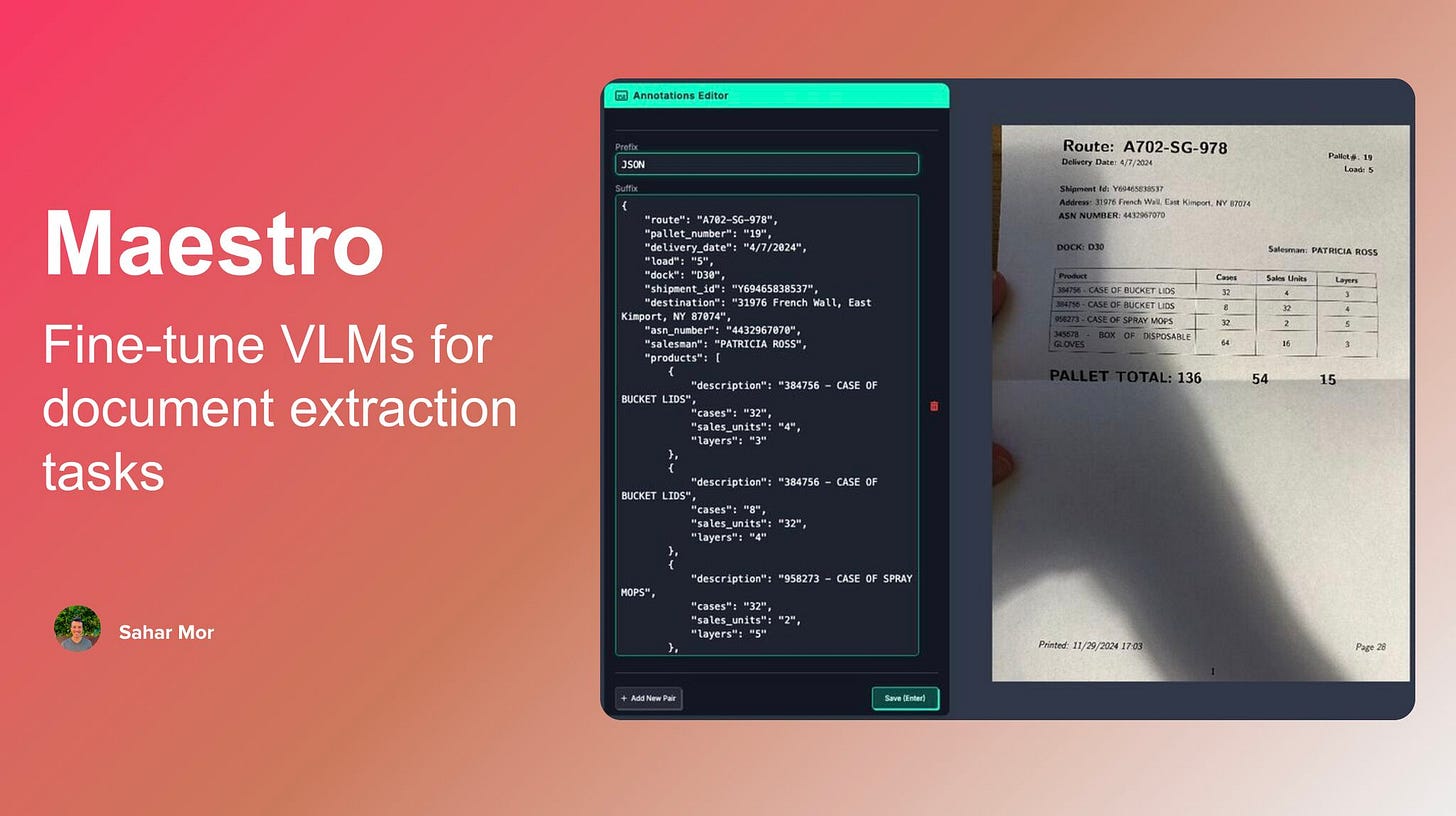

4. Maestro
